Difference between revisions of "2016:Discovery of Repeated Themes & Sections Results"

Tom Collins (talk | contribs) m |

Tom Collins (talk | contribs) m (→Discussion) |

||

| (18 intermediate revisions by the same user not shown) | |||

| Line 22: | Line 22: | ||

== Ground Truth and Algorithms == | == Ground Truth and Algorithms == | ||

| − | The ground truth, called the '''Johannes Kepler University Patterns Test Database''' (JKUPTD-Aug2013), is based on motifs and themes in Barlow and Morgenstern (1953), Schoenberg (1967), and Bruhn (1993). Repeated sections are based on those marked by the composer. These annotations are supplemented with some of our own where necessary. A Development Database (JKUPDD-Aug2013) enabled participants to try out their algorithms. For each piece in the Development and Test Databases, symbolic and synthesised audio versions are crossed with monophonic and polyphonic versions, giving four versions of the task in total: symPoly, symMono, audPoly, and audMono. There were no submissions to the | + | The ground truth, called the '''Johannes Kepler University Patterns Test Database''' (JKUPTD-Aug2013), is based on motifs and themes in Barlow and Morgenstern (1953), Schoenberg (1967), and Bruhn (1993). Repeated sections are based on those marked by the composer. These annotations are supplemented with some of our own where necessary. A Development Database (JKUPDD-Aug2013) enabled participants to try out their algorithms. For each piece in the Development and Test Databases, symbolic and synthesised audio versions are crossed with monophonic and polyphonic versions, giving four versions of the task in total: symPoly, symMono, audPoly, and audMono. There were no submissions to the audPoly or audMono categories this year, so two versions of the task ran. Submitted algorithms are shown in Table 1. |

| Line 34: | Line 34: | ||

|- style="background: green;" | |- style="background: green;" | ||

! Task Version | ! Task Version | ||

| − | ! | + | ! symPoly |

! | ! | ||

! | ! | ||

|- | |- | ||

| − | ! | + | ! DM1 |

| − | | | + | | SIATECCompress-TLF1 || style="text-align: center;" | [https://www.music-ir.org/mirex/abstracts/2016/DM1.pdf PDF] || [http://www.titanmusic.com/ David Meredith] |


|- | |- | ||

| − | ! | + | ! DM2 |

| − | | | + | | SIATECCompress-TLP || style="text-align: center;" | [https://www.music-ir.org/mirex/abstracts/2016/DM2.pdf PDF] || [http://www.titanmusic.com/ David Meredith] |


|- | |- | ||

| − | ! | + | ! DM3 |

| − | | | + | | SIATECCompress-TLR || style="text-align: center;" | [https://www.music-ir.org/mirex/abstracts/2016/DM3.pdf PDF] || [http://www.titanmusic.com/ David Meredith] |

|- | |- | ||

|- style="background: green;" | |- style="background: green;" | ||

! Task Version | ! Task Version | ||

| − | ! | + | ! symMono |

! | ! | ||

! | ! | ||

|- | |- | ||

| − | ! | + | ! DM1 |

| − | | | + | | SIATECCompress-TLF1 || style="text-align: center;" | [https://www.music-ir.org/mirex/abstracts/2016/DM1.pdf PDF] || [http://www.titanmusic.com/ David Meredith] |

|- | |- | ||

| − | ! | + | ! DM2 |

| − | | | + | | SIATECCompress-TLP || style="text-align: center;" | [https://www.music-ir.org/mirex/abstracts/2016/DM2.pdf PDF] || [http://www.titanmusic.com/ David Meredith] |

|- | |- | ||

| − | ! | + | ! DM3 |

| − | | | + | | SIATECCompress-TLR || style="text-align: center;" | [https://www.music-ir.org/mirex/abstracts/2016/DM3.pdf PDF] || [http://www.titanmusic.com/ David Meredith] |

|- | |- | ||

| + | ! IR1 | ||

| + | | mypattern || style="text-align: center;" | [https://www.music-ir.org/mirex/abstracts/2016/IR1.pdf PDF] || [https://sites.google.com/site/irisyupingren/home/ Iris YuPing Ren] | ||

| + | |- | ||

| + | ! PLM1 | ||

| + | | SYMCHM || style="text-align: center;" | [https://www.music-ir.org/mirex/abstracts/2016/PLM1.pdf PDF] || [http://musiclab.si/ Matevž Pesek], Urša Medvešek, [http://www.cs.bham.ac.uk/~leonarda/ Aleš Leonardis], [http://www.fri.uni-lj.si/en/matija-marolt Matija Marolt] | ||

| + | |- | ||

| + | ! VM1'14 | ||

| + | | VM1 || style="text-align: center;" | [https://www.music-ir.org/mirex/abstracts/2016/VM1.pdf PDF] || [http://personprofil.aau.dk/128103 Gissel Velarde], [http://www.titanmusic.com/ David Meredith] | ||

| + | |- | ||

| + | ! VM2'14 | ||

| + | | VM2 || style="text-align: center;" | [https://www.music-ir.org/mirex/abstracts/2016/VM2.pdf PDF] || [http://personprofil.aau.dk/128103 Gissel Velarde], [http://www.titanmusic.com/ David Meredith] | ||

| + | |- | ||

|} | |} | ||

| − | '''Table 1.''' Algorithms submitted to DRTS | + | '''Table 1.''' Algorithms submitted to DRTS. |

| − | == Results | + | == Results == |

(For mathematical definitions of the various metrics, please see [[2015:Discovery_of_Repeated_Themes_&_Sections#Evaluation_Procedure]].) | (For mathematical definitions of the various metrics, please see [[2015:Discovery_of_Repeated_Themes_&_Sections#Evaluation_Procedure]].) | ||

| − | + | === symMono === | |

| + | |||

| + | We welcomed a new participant (Ren, 2016) to the symMono version of the task. All other researchers participated in previous years, but some (Meredith, 2016; Pesek, Leonardis, & Marolt, 2016) submitted new versions of algorithms. | ||

| + | |||

| + | To recap some information from previous years, the metrics that can be calculated on a per-pattern basis (establishment recall and occurrence recall) present opportunities to test for significant differences in performance between algorithms. For the remaining metrics, which can only be calculated on a per-piece basis, we do not test for significant differences because the sample size is too small. Still, trends in results are evident in the figures and tables below. For instance, in the symMono task version, VM1 (Velarde & Meredith, 2016) achieves a higher establishment F1 score than all other algorithms on all but one piece (Fig. 18). It is also the stand-out performer on establishment recall (Fig. 14). IR1 does particularly well for some of the smaller/shorter patterns in piece 5 (Fig. 14), which other algorithms seem to miss. | ||

| + | |||

| + | Application of Friedman's test to establishment recall per pattern results revealed a significant main effect of algorithm (<math>\chi^2(3) = 54.5062,\ p < .001</math>). Bonferroni-corrected, pairwise tests suggested the ordering VM1 > DM1 ~ IR1 > PLM1, where > denotes a significant difference and ~ denotes no significant difference. Application of Friedman's test to occurrence recall per pattern results revealed a significant main effect of algorithm (<math>\chi^2(3) = 54.5062,\ p < .001</math>). Bonferroni-corrected, pairwise tests suggested the ordering VM1 > DM1 ~ PLM1 ~ IR1. One of these tests was only borderline-significant (that between VM1 and PLM1), but this is probably due to averaging results for PLM1 on piece 5 (see below or Fig. 15). | ||

| + | |||

| + | With regard to runtimes (Fig. 25), it should be noted that those for PLM1 and IR1 are somewhat harsh, because these submissions had to be run on slower machines than the Linux cluster on which the other submissions were run. This is a function of the programming language/operating system used by the researchers. After running for one week on piece 5 (the longest piece), PLM1 did not produce output, so it was assigned mean values over the remaining pieces (Figs. 14 and 15). It was assigned the maximum runtime over the remaining pieces (Fig. 25). The task captain accepts some responsibility for such issues: he should have made the longest piece in the development database far longer than any piece in the test database! | ||

| + | |||

| + | PLM1, DM2, VM1, and VM2 output far fewer patterns than other algorithms (see the n_Q column in Table 4). One potential application of pattern discovery algorithms is for playback accompanied by a visualization of repetitive structure. The relatively few patterns output by these algorithms make them more feasible candidates for this application. | ||

| + | |||

| + | === symPoly === | ||

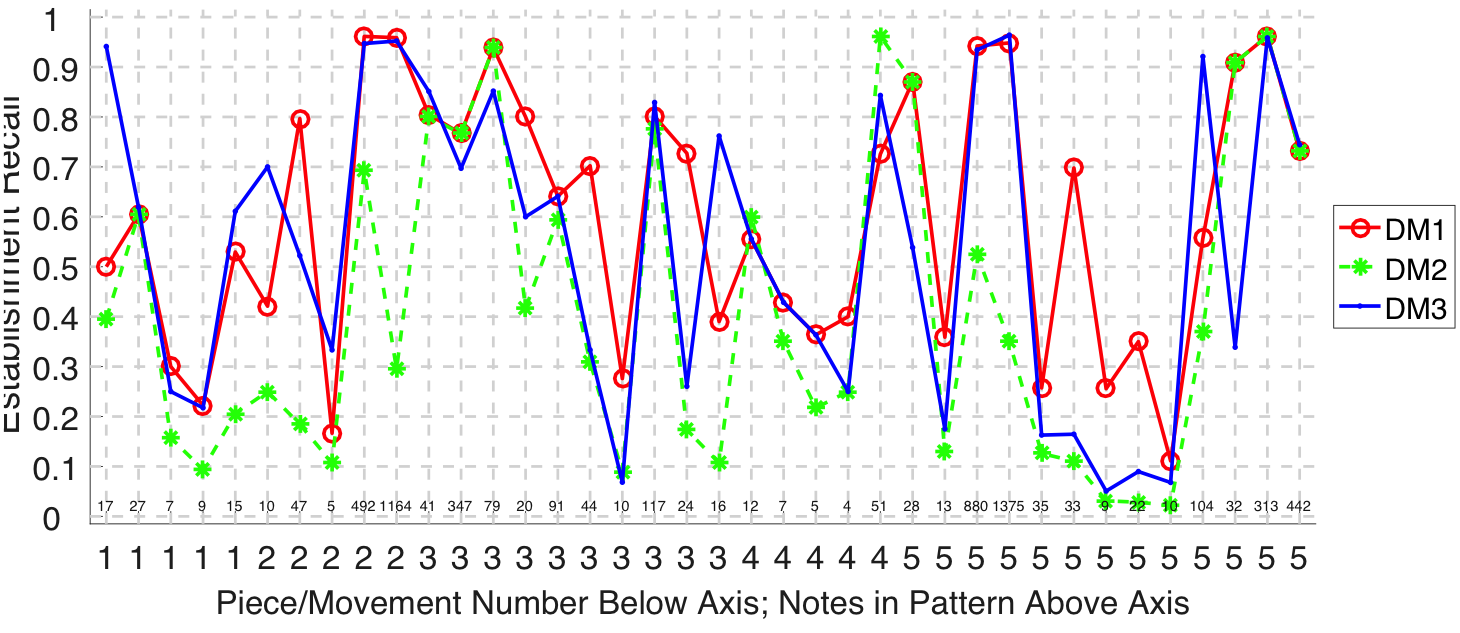

| − | | + | Meredith (2016) submitted three algorithms to the symPoly task version, selected according to performance on three-layer F1, precision, and recall metrics, respectively. DM3 outperforms DM2 on establishment recall (<math>\chi^2(1) = 11.9189,\ p < .05</math>, Bonferroni corrected), which is perhaps to be expected because DM3 was submitted by Meredith (2016) on the basis of a recall metric, whereas DM2 was submitted on the basis of a precision metric. Similarly, DM1 outperforms DM2 on establishment recall (<math>\chi^2(1) = 23.5161,\ p < .005</math>, Bonferroni corrected). Again, this is not particularly surprising because DM1 was submitted on the basis of an F1 performance metric (which combines recall and precision), whereas DM2 was submitted on the basis of a precision metric. DM3 is generally higher than DM2 on three-layer recall, and DM2 is mostly higher than DM3 on three-layer precision. Both of these results make sense, given the basis upon which these algorithms were submitted. |

== Discussion == | == Discussion == | ||

| − | + | To be added post-ISMIR! | |

| − | + | I certainly have to step down as Task Captain next year, because I have too many other commitments. | |

| − | It | + | It has already been discussed that next year we may switch to new training and test databases that focus not directly on the discovery task itself, but on an application of pattern discovery that can be used as a proxy to evaluate the extent to which algorithms have retrieved relevant repetitive material. For example, a prediction task. This may also bring interest from deep learners and/or cognitive scientists, looking to build on previous participants' work, which would be great. If you are interested in helping out with the preparation of a new version of the task, you are welcome to [http://tomcollinsresearch.net/contact.html get in touch]. |

Tom Collins, | Tom Collins, | ||

| − | + | New York, 2016 | |

== Results in Detail == | == Results in Detail == | ||

| Line 104: | Line 113: | ||

=== symPoly === | === symPoly === | ||

| − | [[File: | + | [[File:01symPolyEstRecPerPatt2016.png|600px]] |

'''Figure 2.''' Establishment recall on a per-pattern basis. Establishment recall answers the following question. On average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype? | '''Figure 2.''' Establishment recall on a per-pattern basis. Establishment recall answers the following question. On average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype? | ||

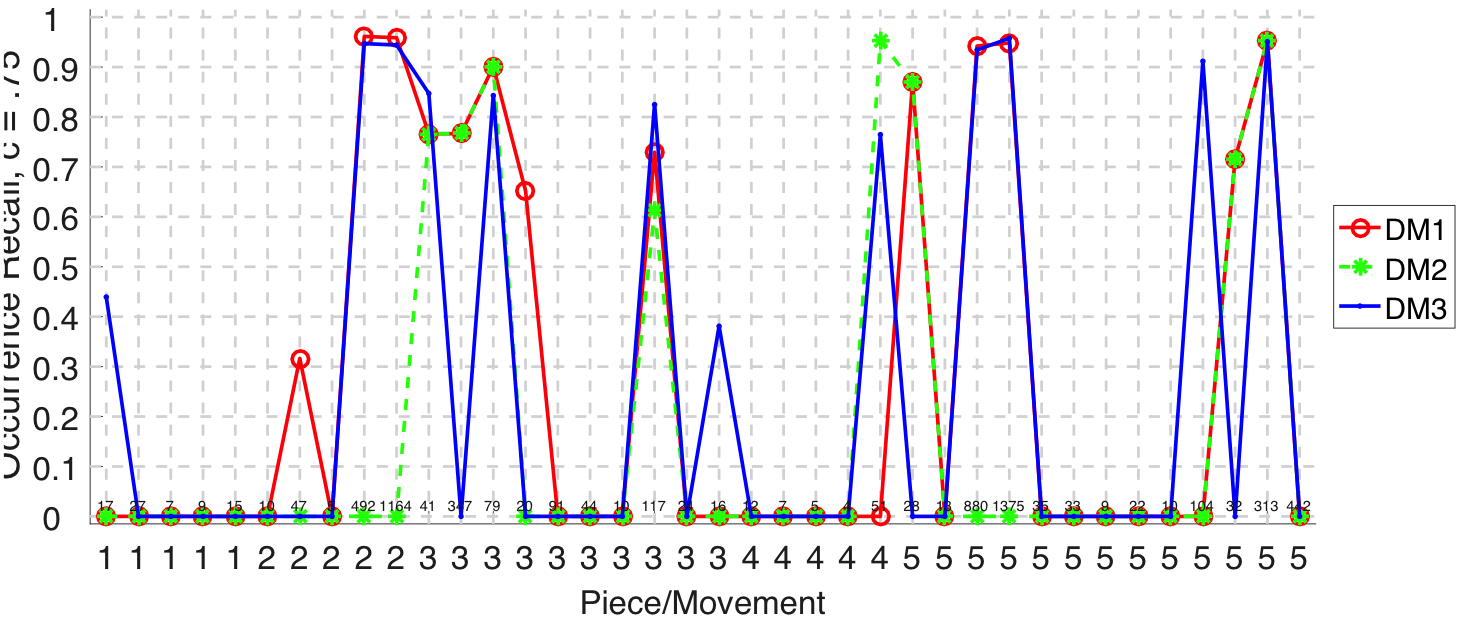

| − | [[File: | + | [[File:04symPolyOccRecPerPatt2016.png|600px]] |

'''Figure 3.''' Occurrence recall on a per-pattern basis. Occurrence recall answers the following question. On average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set? | '''Figure 3.''' Occurrence recall on a per-pattern basis. Occurrence recall answers the following question. On average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set? | ||

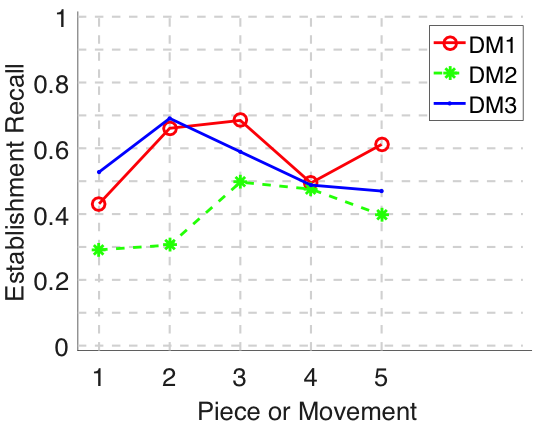

| − | [[File: | + | [[File:01symPolyEstRec2016.png|400px]] |

'''Figure 4.''' Establishment recall averaged over each piece/movement. Establishment recall answers the following question. On average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype? | '''Figure 4.''' Establishment recall averaged over each piece/movement. Establishment recall answers the following question. On average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype? | ||

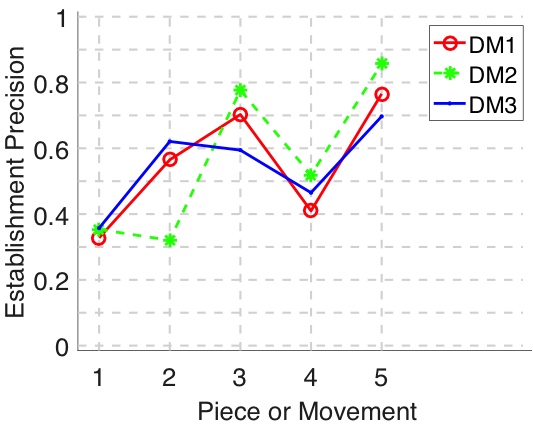

| − | [[File: | + | [[File:02symPolyEstPrec2016.png|400px]] |

'''Figure 5.''' Establishment precision averaged over each piece/movement. Establishment precision answers the following question. On average, how similar is the most similar ground-truth pattern prototype to an algorithm-output pattern? | '''Figure 5.''' Establishment precision averaged over each piece/movement. Establishment precision answers the following question. On average, how similar is the most similar ground-truth pattern prototype to an algorithm-output pattern? | ||

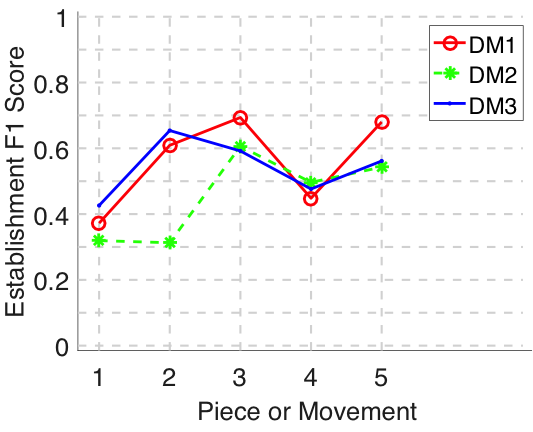

| − | [[File: | + | [[File:03symPolyEstF12016.png|400px]] |

'''Figure 6.''' Establishment F1 averaged over each piece/movement. Establishment F1 is an average of establishment precision and establishment recall. | '''Figure 6.''' Establishment F1 averaged over each piece/movement. Establishment F1 is an average of establishment precision and establishment recall. | ||

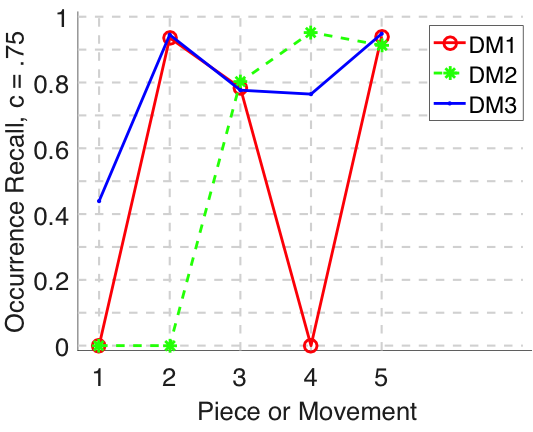

| − | [[File: | + | [[File:04symPolyOccRecP752016.png|400px]] |

'''Figure 7.''' Occurrence recall (<math>c = .75</math>) averaged over each piece/movement. Occurrence recall answers the following question. On average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set? | '''Figure 7.''' Occurrence recall (<math>c = .75</math>) averaged over each piece/movement. Occurrence recall answers the following question. On average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set? | ||

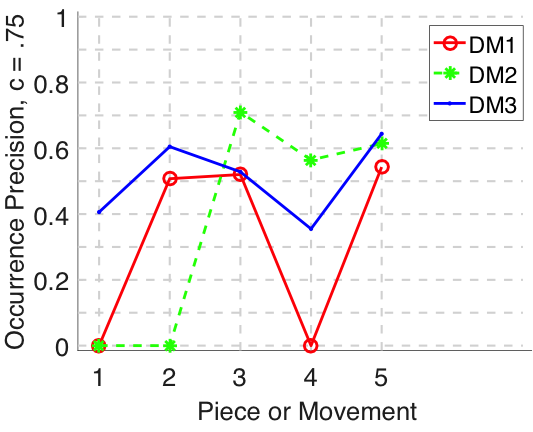

| − | [[File: | + | [[File:05symPolyOccPrecP752016.png|400px]] |

'''Figure 8.''' Occurrence precision (<math>c = .75</math>) averaged over each piece/movement. Occurrence precision answers the following question. On average, how similar is the most similar discovered ground-truth occurrence set to a set of algorithm-output pattern occurrences? | '''Figure 8.''' Occurrence precision (<math>c = .75</math>) averaged over each piece/movement. Occurrence precision answers the following question. On average, how similar is the most similar discovered ground-truth occurrence set to a set of algorithm-output pattern occurrences? | ||

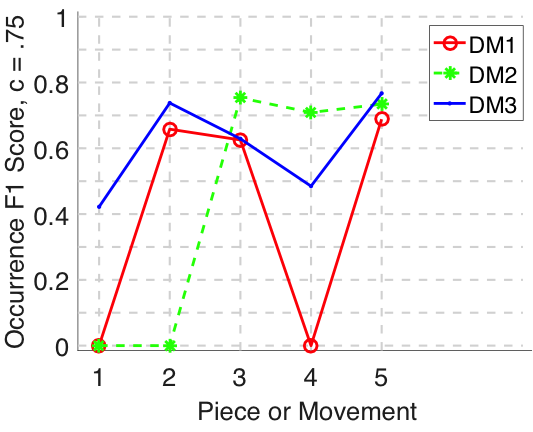

| − | [[File: | + | [[File:06symPolyOccF1P752016.png|400px]] |

'''Figure 9.''' Occurrence F1 (<math>c = .75</math>) averaged over each piece/movement. Occurrence F1 is an average of occurrence precision and occurrence recall. | '''Figure 9.''' Occurrence F1 (<math>c = .75</math>) averaged over each piece/movement. Occurrence F1 is an average of occurrence precision and occurrence recall. | ||

| − | [[File: | + | [[File:07symPolyR32016.png|400px]] |

'''Figure 10.''' Three-layer recall averaged over each piece/movement. Rather than using <math>|P \cap Q|/\max\{|P|, |Q|\}</math> as a similarity measure (which is the default for establishment recall), three-layer recall uses <math>2|P \cap Q|/(|P| + |Q|)</math>, which is a kind of F1 measure. | '''Figure 10.''' Three-layer recall averaged over each piece/movement. Rather than using <math>|P \cap Q|/\max\{|P|, |Q|\}</math> as a similarity measure (which is the default for establishment recall), three-layer recall uses <math>2|P \cap Q|/(|P| + |Q|)</math>, which is a kind of F1 measure. | ||

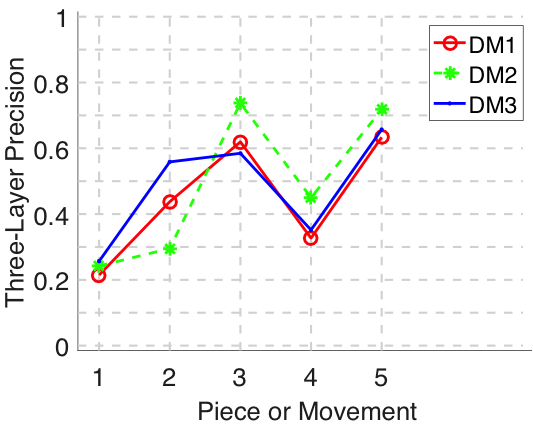

| − | [[File: | + | [[File:08symPolyP32016.png|400px]] |

'''Figure 11.''' Three-layer precision averaged over each piece/movement. Rather than using <math>|P \cap Q|/\max\{|P|, |Q|\}</math> as a similarity measure (which is the default for establishment precision), three-layer precision uses <math>2|P \cap Q|/(|P| + |Q|)</math>, which is a kind of F1 measure. | '''Figure 11.''' Three-layer precision averaged over each piece/movement. Rather than using <math>|P \cap Q|/\max\{|P|, |Q|\}</math> as a similarity measure (which is the default for establishment precision), three-layer precision uses <math>2|P \cap Q|/(|P| + |Q|)</math>, which is a kind of F1 measure. | ||

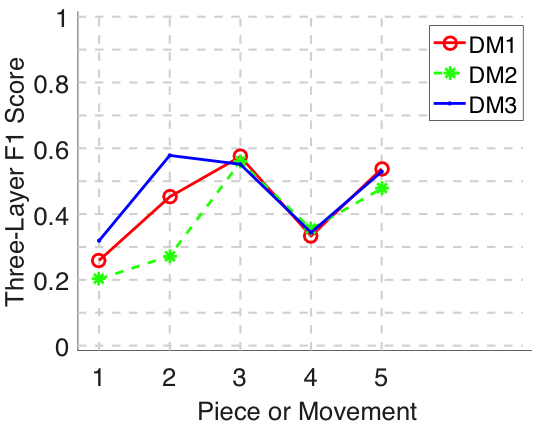

| − | [[File: | + | [[File:09symPolyTLF2016.png|400px]] |

'''Figure 12.''' Three-layer F1 (TLF) averaged over each piece/movement. TLF is an average of three-layer precision and three-layer recall. | '''Figure 12.''' Three-layer F1 (TLF) averaged over each piece/movement. TLF is an average of three-layer precision and three-layer recall. | ||

| Line 162: | Line 171: | ||

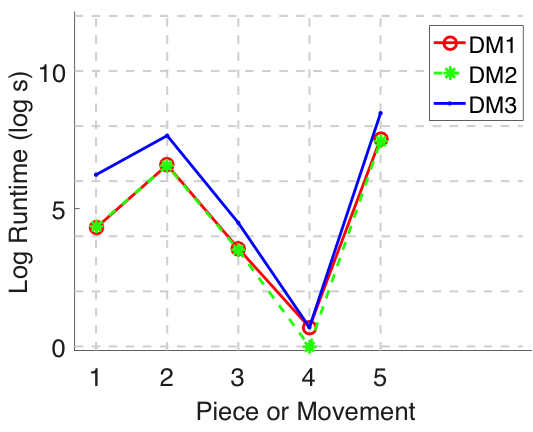

'''Figure 13.''' Log runtime of the algorithm for each piece/movement. | '''Figure 13.''' Log runtime of the algorithm for each piece/movement. | ||

| − | |||

=== symMono === | === symMono === | ||

| − | ''(Submission | + | ''(Submission PLM1 did not complete on piece 5. The task captain took the decision to assign the mean of the evaluation metrics for PLM1 calculated across the remaining pieces. Apart from runtime, in which case the maximum across the remaining pieces was assigned.)'' |

| − | [[File: | + | [[File:11symMonoEstRecPerPatt2016.png|600px]] |

| − | '''Figure | + | '''Figure 14.''' Establishment recall on a per-pattern basis. Establishment recall answers the following question. On average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype? |

| − | [[File: | + | [[File:14symMonoOccRecPerPatt2016.png|600px]] |

| − | '''Figure | + | '''Figure 15.''' Occurrence recall on a per-pattern basis. Occurrence recall answers the following question. On average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set? |

| − | [[File: | + | [[File:11symMonoEstRec2016.png|400px]] |

| − | '''Figure | + | '''Figure 16.''' Establishment recall averaged over each piece/movement. Establishment recall answers the following question. On average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype? |

| − | [[File: | + | [[File:12symMonoEstPrec2016.png|400px]] |

| − | '''Figure | + | '''Figure 17.''' Establishment precision averaged over each piece/movement. Establishment precision answers the following question. On average, how similar is the most similar ground-truth pattern prototype to an algorithm-output pattern? |

| − | [[File: | + | [[File:13symMonoEstF12016.png|400px]] |

| − | '''Figure | + | '''Figure 18.''' Establishment F1 averaged over each piece/movement. Establishment F1 is an average of establishment precision and establishment recall. |

| − | [[File: | + | [[File:14symMonoOccRecP752016.png|400px]] |

| − | '''Figure | + | '''Figure 19.''' Occurrence recall (<math>c = .75</math>) averaged over each piece/movement. Occurrence recall answers the following question. On average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set? |

| − | [[File: | + | [[File:15symMonoOccPrecP752016.png|400px]] |

| − | '''Figure | + | '''Figure 20.''' Occurrence precision (<math>c = .75</math>) averaged over each piece/movement. Occurrence precision answers the following question. On average, how similar is the most similar discovered ground-truth occurrence set to a set of algorithm-output pattern occurrences? |

| − | [[File: | + | [[File:16symMonoOccF1P752016.png|400px]] |

| − | '''Figure | + | '''Figure 21.''' Occurrence F1 (<math>c = .75</math>) averaged over each piece/movement. Occurrence F1 is an average of occurrence precision and occurrence recall. |

| − | [[File: | + | [[File:17symMonoR32016.png|400px]] |

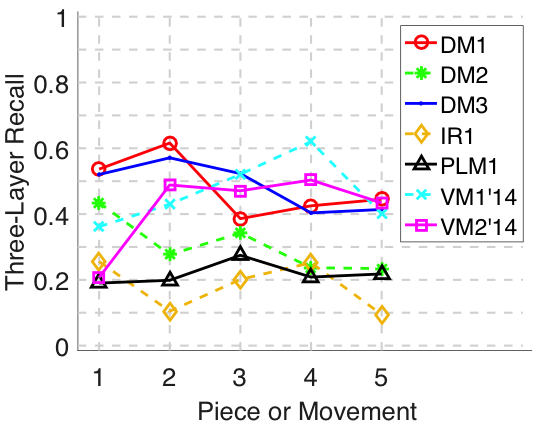

| − | '''Figure | + | '''Figure 22.''' Three-layer recall averaged over each piece/movement. Rather than using <math>|P \cap Q|/\max\{|P|, |Q|\}</math> as a similarity measure (which is the default for establishment recall), three-layer recall uses <math>2|P \cap Q|/(|P| + |Q|)</math>, which is a kind of F1 measure. |

| − | [[File: | + | [[File:18symMonoP32016.png|400px]] |

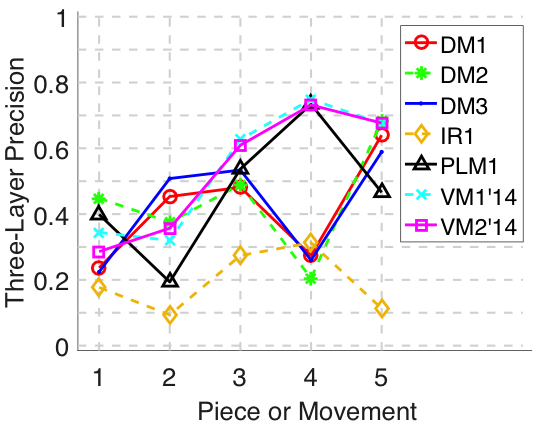

| − | '''Figure | + | '''Figure 23.''' Three-layer precision averaged over each piece/movement. Rather than using <math>|P \cap Q|/\max\{|P|, |Q|\}</math> as a similarity measure (which is the default for establishment precision), three-layer precision uses <math>2|P \cap Q|/(|P| + |Q|)</math>, which is a kind of F1 measure. |

| − | [[File: | + | [[File:19symMonoTLF2016.png|400px]] |

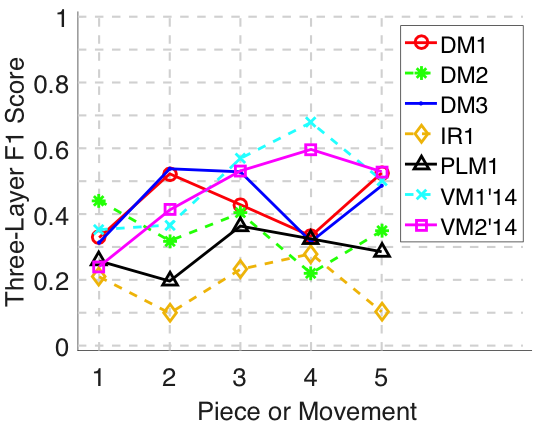

| − | '''Figure | + | '''Figure 24.''' Three-layer F1 (TLF) averaged over each piece/movement. TLF is an average of three-layer precision and three-layer recall. |

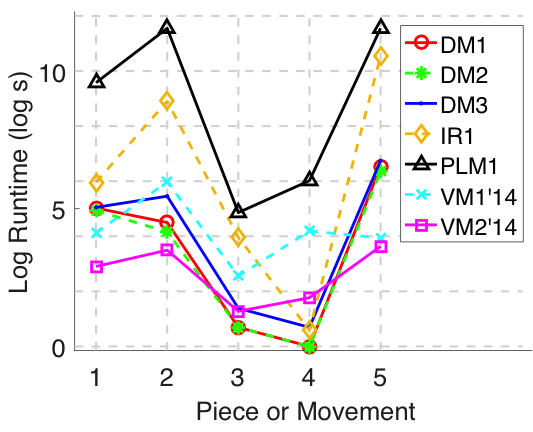

| − | [[File: | + | [[File:20symMonoRuntime2016.png|400px]] |

| − | |||

| − | |||

| + | '''Figure 25.''' Log runtime of the algorithm for each piece/movement. | ||

== Tabular Versions of Plots == | == Tabular Versions of Plots == | ||

| − | === | + | === symPoly === |

| − | <csv p=3> | + | <csv p=3>2016/drts/symPolyResults2016.csv</csv> |

'''Table 2.''' Tabular version of Figures 4-13. | '''Table 2.''' Tabular version of Figures 4-13. | ||

| − | <csv p=3> | + | <csv p=3>2016/drts/symMonoResultsPerPatt2016.csv</csv> |

'''Table 3.''' Tabular version of Figures 2 and 3. | '''Table 3.''' Tabular version of Figures 2 and 3. | ||

| − | |||

| − | <csv p=3> | + | === symMono === |

| + | |||

| + | <csv p=3>2016/drts/symMonoResults2016.csv</csv> | ||

| − | '''Table 4.''' | + | '''Table 4.''' Tabular version of Figures 16-25. |

| − | <csv p=3> | + | <csv p=3>2016/drts/symMonoResultsPerPatt2016.csv</csv> |

'''Table 5.''' Tabular version of Figures 14 and 15. | '''Table 5.''' Tabular version of Figures 14 and 15. | ||


== References == | == References == | ||

Latest revision as of 07:59, 11 August 2016

THIS PAGE IS UNDER CONSTRUCTION


Introduction

The task: algorithms take a piece of music as input, and output a list of patterns repeated within that piece. A pattern is defined as a set of ontime-pitch pairs that occurs at least twice (i.e., is repeated at least once) in a piece of music. The second, third, etc. occurrences of the pattern will likely be shifted in time and/or transposed, relative to the first occurrence. Ideally an algorithm will be able to discover all exact and inexact occurrences of a pattern within a piece, so in evaluating this task we are interested in both:

- (1) to what extent an algorithm can discover one occurrence, up to time shift and transposition, and;

- (2) to what extent it can find all occurrences.

The metrics establishment recall, establishment precision and establishment F1 address (1), and the metrics occurrence recall, occurrence precision, and occurrence F1 address (2).
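The definition above, in which a pattern is a set of ontime-pitch pairs and later occurrences are shifted in time and/or transposed, can be illustrated with a small Python sketch. It is a brute-force search for exact occurrences only and is not any of the submitted algorithms; the toy notes, the use of MIDI pitch numbers, and the function names `translate` and `exact_occurrences` are assumptions made for illustration.

```python
from typing import List, Set, Tuple

Point = Tuple[float, int]  # (ontime in crotchet beats, MIDI pitch number): an assumed encoding


def translate(pattern: Set[Point], shift: Tuple[float, int]) -> Set[Point]:
    """Shift every point by (time offset in beats, transposition in semitones)."""
    dt, dp = shift
    return {(on + dt, pitch + dp) for on, pitch in pattern}


def exact_occurrences(pattern: Set[Point], piece: Set[Point]) -> List[Tuple[float, int]]:
    """Return every shift that maps the whole pattern onto notes of the piece."""
    anchor = min(pattern)  # lexicographically first point of the pattern
    shifts = []
    for on, pitch in sorted(piece):
        shift = (on - anchor[0], pitch - anchor[1])
        if translate(pattern, shift) <= piece:  # subset test
            shifts.append(shift)
    return shifts


# Toy piece (hypothetical): a three-note motif, the same motif 4 beats later
# and 2 semitones higher, plus one unrelated note.
motif = {(0.0, 60), (1.0, 62), (2.0, 64)}
piece = motif | translate(motif, (4.0, 2)) | {(3.0, 55)}

found = exact_occurrences(motif, piece)
print(found)  # the identity shift and the (4 beats, +2 semitones) shift
```

Inexact occurrences, which the task also asks algorithms to discover, would need a tolerance on how many points must match; that is deliberately left out of this sketch.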

Contribution

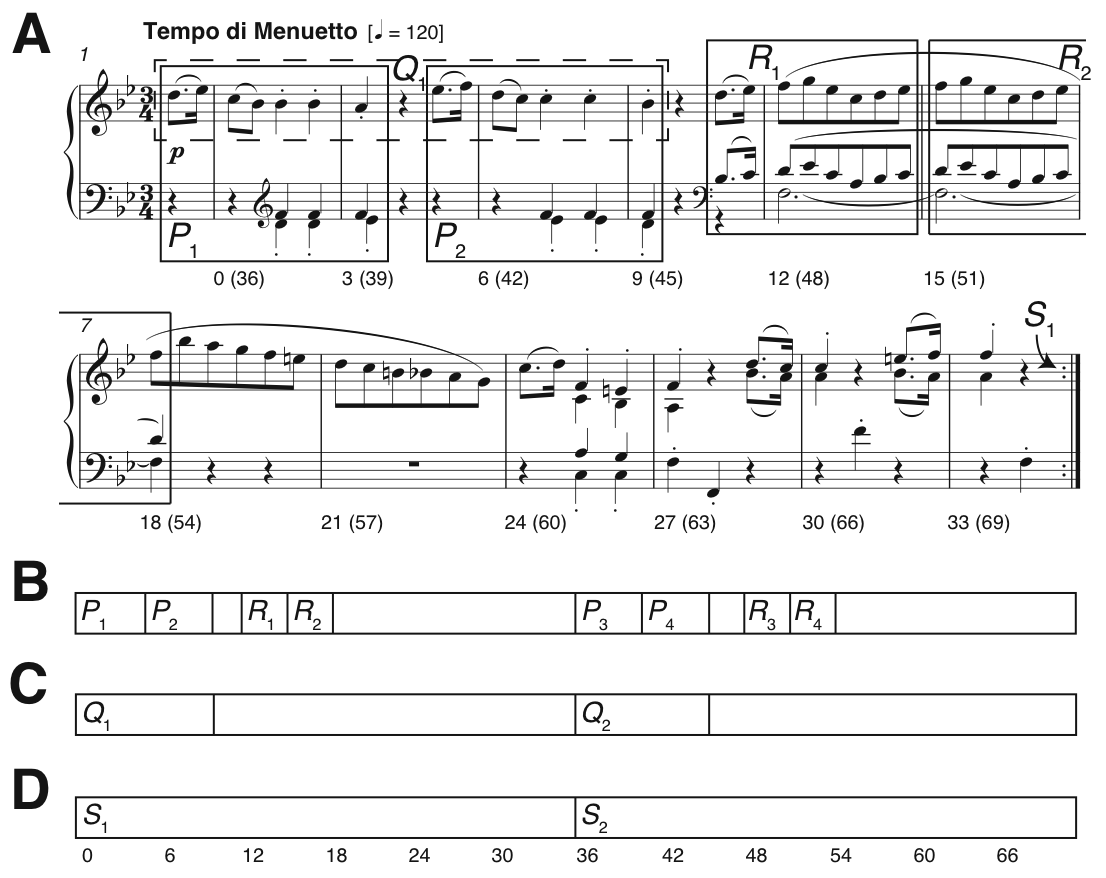

Existing approaches to music structure analysis in MIR tend to focus on segmentation (e.g., Weiss & Bello, 2010). The contribution of this task is to afford access to the note content itself (please see the example in Fig. 1A), requiring algorithms to do more than label time windows (e.g., the segmentations in Figs. 1B-D). For instance, a discovery algorithm applied to the piece in Fig. 1A should return a pattern corresponding to the note content of and , as well as a pattern corresponding to the note content of . This is because occurs again independently of the accompaniment in bars 19-22 (not shown here). The ground truth also contains nested patterns, such as in Fig. 1A being a subset of the sectional repetition , reflecting the often-hierarchical nature of musical repetition. While we recognise the appealing simplicity of linear segmentation, in the Discovery of Repeated Themes & Sections task we are demanding analysis at a greater level of detail, and have built a ground truth that contains overlapping and nested patterns (Collins et al., 2014).

Figure 1. Pattern discovery v segmentation. (A) Bars 1-12 of Mozart’s Piano Sonata in E-flat major K282 mvt.2, showing some ground-truth themes and repeated sections; (B-D) Three linear segmentations. Numbers below the staff in Fig. 1A and below the segmentation in Fig. 1D indicate crotchet beats, from zero for bar 1 beat 1.

For a more detailed introduction to the task, please see 2015:Discovery_of_Repeated_Themes_&_Sections.

Ground Truth and Algorithms

The ground truth, called the Johannes Kepler University Patterns Test Database (JKUPTD-Aug2013), is based on motifs and themes in Barlow and Morgenstern (1953), Schoenberg (1967), and Bruhn (1993). Repeated sections are based on those marked by the composer. These annotations are supplemented with some of our own where necessary. A Development Database (JKUPDD-Aug2013) enabled participants to try out their algorithms. For each piece in the Development and Test Databases, symbolic and synthesised audio versions are crossed with monophonic and polyphonic versions, giving four versions of the task in total: symPoly, symMono, audPoly, and audMono. There were no submissions to the audPoly or audMono categories this year, so two versions of the task ran. Submitted algorithms are shown in Table 1.

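| Sub code | Submission name | Abstract | Contributors |
|---|---|---|---|
| Task Version | symPoly | | |
| DM1 | SIATECCompress-TLF1 | [PDF](https://www.music-ir.org/mirex/abstracts/2016/DM1.pdf) | David Meredith |
| DM2 | SIATECCompress-TLP | [PDF](https://www.music-ir.org/mirex/abstracts/2016/DM2.pdf) | David Meredith |
| DM3 | SIATECCompress-TLR | [PDF](https://www.music-ir.org/mirex/abstracts/2016/DM3.pdf) | David Meredith |
| Task Version | symMono | | |
| DM1 | SIATECCompress-TLF1 | [PDF](https://www.music-ir.org/mirex/abstracts/2016/DM1.pdf) | David Meredith |
| DM2 | SIATECCompress-TLP | [PDF](https://www.music-ir.org/mirex/abstracts/2016/DM2.pdf) | David Meredith |
| DM3 | SIATECCompress-TLR | [PDF](https://www.music-ir.org/mirex/abstracts/2016/DM3.pdf) | David Meredith |
| IR1 | mypattern | [PDF](https://www.music-ir.org/mirex/abstracts/2016/IR1.pdf) | Iris YuPing Ren |
| PLM1 | SYMCHM | [PDF](https://www.music-ir.org/mirex/abstracts/2016/PLM1.pdf) | Matevž Pesek, Urša Medvešek, Aleš Leonardis, Matija Marolt |
| VM1'14 | VM1 | [PDF](https://www.music-ir.org/mirex/abstracts/2016/VM1.pdf) | Gissel Velarde, David Meredith |
| VM2'14 | VM2 | [PDF](https://www.music-ir.org/mirex/abstracts/2016/VM2.pdf) | Gissel Velarde, David Meredith |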

Table 1. Algorithms submitted to DRTS.

Results

(For mathematical definitions of the various metrics, please see 2015:Discovery_of_Repeated_Themes_&_Sections#Evaluation_Procedure.)

symMono

We welcomed a new participant (Ren, 2016) to the symMono version of the task. All other researchers participated in previous years, but some (Meredith, 2016; Pesek, Leonardis, & Marolt, 2016) submitted new versions of algorithms.

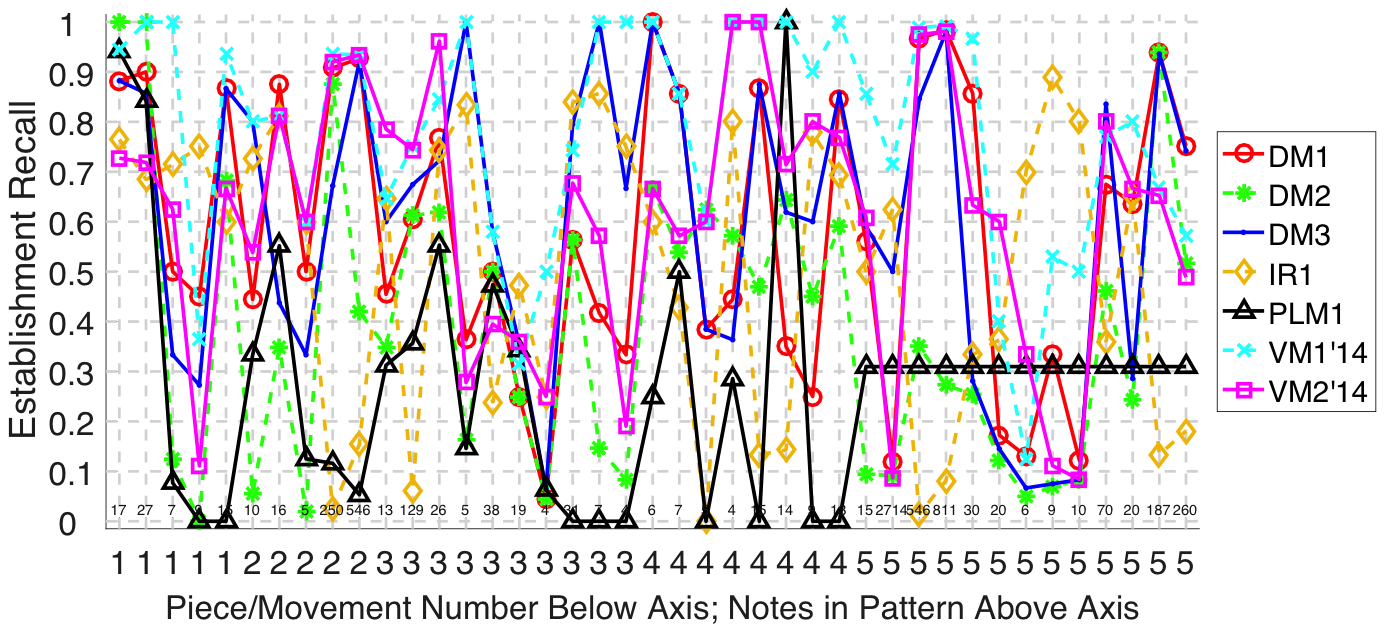

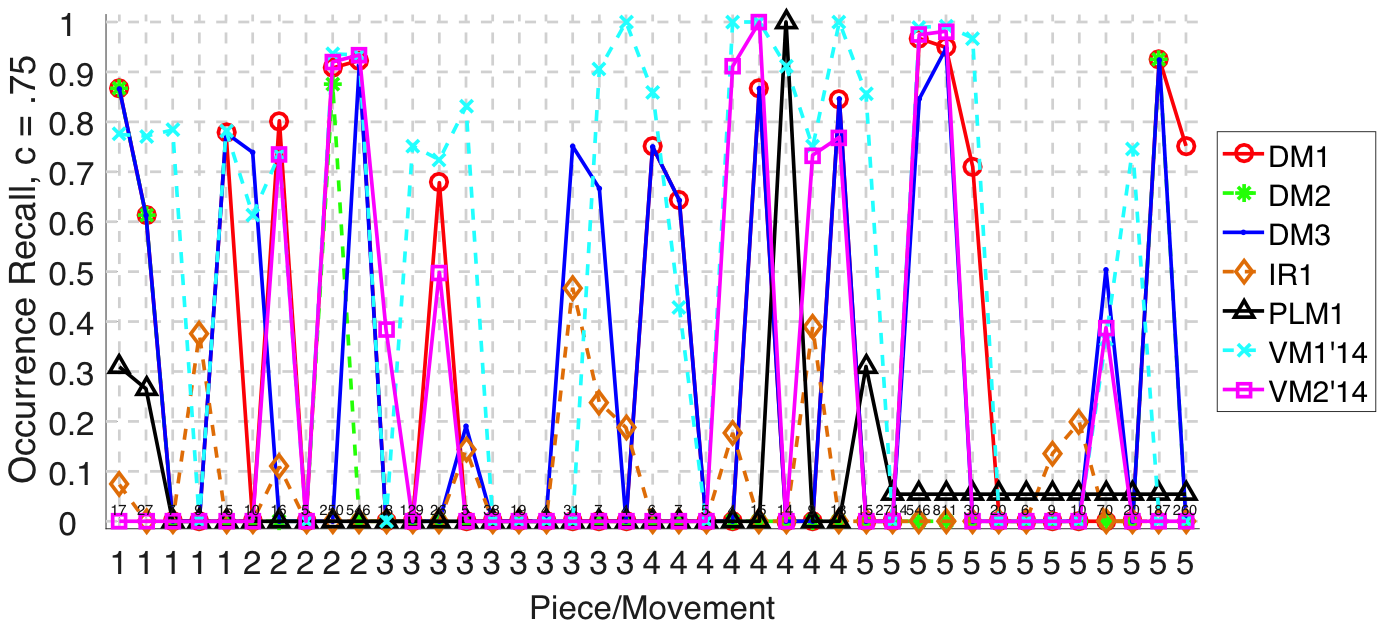

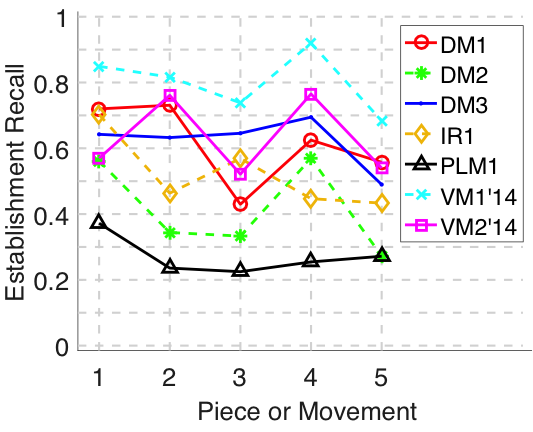

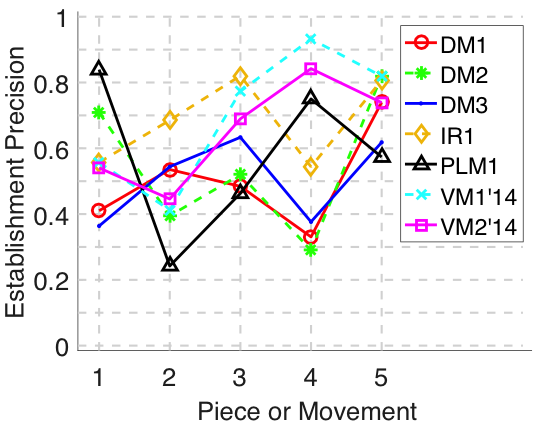

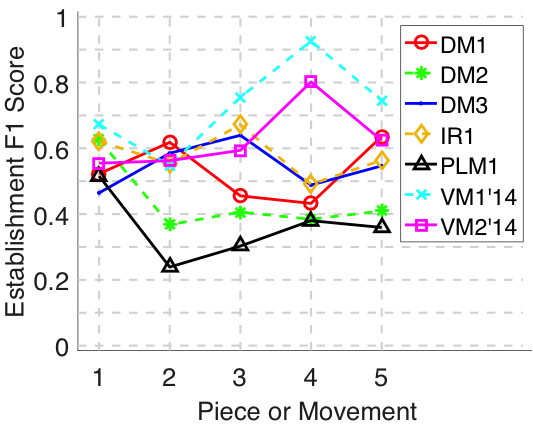

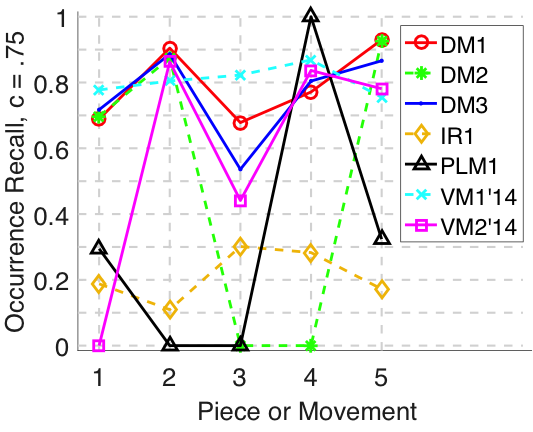

To recap some information from previous years, the metrics that can be calculated on a per-pattern basis (establishment recall and occurrence recall) present opportunities to test for significant differences in performance between algorithms. For the remaining metrics, which can only be calculated on a per-piece basis, we do not test for significant differences because the sample size is too small. Still, trends in results are evident in the figures and tables below. For instance, in the symMono task version, VM1 (Velarde & Meredith, 2016) achieves a higher establishment F1 score than all other algorithms on all but one piece (Fig. 18). It is also the stand-out performer on establishment recall (Fig. 14). IR1 does particularly well for some of the smaller/shorter patterns in piece 5 (Fig. 14), which other algorithms seem to miss.

Application of Friedman's test to establishment recall per pattern results revealed a significant main effect of algorithm (χ²(3) = 54.5062, p < .001). Bonferroni-corrected, pairwise tests suggested the ordering VM1 > DM1 ~ IR1 > PLM1, where > denotes a significant difference and ~ denotes no significant difference. Application of Friedman's test to occurrence recall per pattern results revealed a significant main effect of algorithm (χ²(3) = 54.5062, p < .001). Bonferroni-corrected, pairwise tests suggested the ordering VM1 > DM1 ~ PLM1 ~ IR1. One of these tests was only borderline-significant (that between VM1 and PLM1), but this is probably due to averaging results for PLM1 on piece 5 (see below or Fig. 15).
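For readers unfamiliar with the procedure, the following Python sketch shows how a Friedman statistic and a Bonferroni-corrected threshold could be computed from per-pattern scores. It is not the script used to produce the results above. The score values and the column layout (one row per ground-truth pattern, one column per algorithm) are hypothetical, and the statistic omits the tie correction.

```python
from itertools import combinations
from typing import List


def average_ranks(row: List[float]) -> List[float]:
    """Rank one row of scores (1 = lowest); tied scores share their mean rank."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and row[order[end + 1]] == row[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(pos, end + 1):
            ranks[order[k]] = mean_rank
        pos = end + 1
    return ranks


def friedman_statistic(blocks: List[List[float]]) -> float:
    """Friedman chi-square (no tie correction) for n rows and k columns."""
    n, k = len(blocks), len(blocks[0])
    rank_sums = [0.0] * k
    for row in blocks:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)


# Hypothetical recall scores: 4 patterns (rows) x 4 algorithms (columns).
scores = [
    [0.90, 0.60, 0.50, 0.20],
    [0.80, 0.70, 0.40, 0.30],
    [0.95, 0.50, 0.60, 0.10],
    [0.70, 0.65, 0.55, 0.25],
]

chi2 = friedman_statistic(scores)  # compared against chi-square with k - 1 = 3 df
n_pairs = len(list(combinations(range(len(scores[0])), 2)))
alpha_per_test = 0.05 / n_pairs  # Bonferroni: split alpha across pairwise tests
print(round(chi2, 4), n_pairs, alpha_per_test)
```

With four algorithms there are six pairwise comparisons, so each follow-up test is judged against .05/6 rather than .05. In practice a library routine such as `scipy.stats.friedmanchisquare` could replace the hand-written statistic and also supply the p-value.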

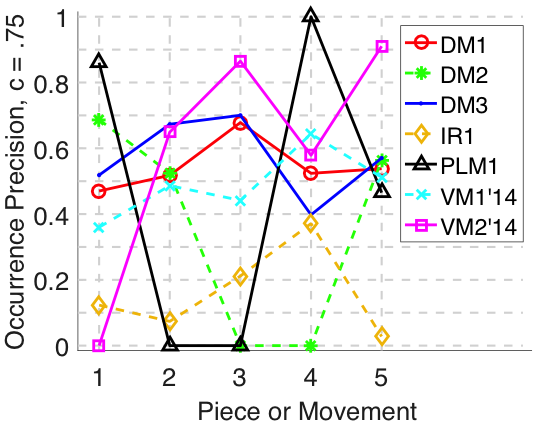

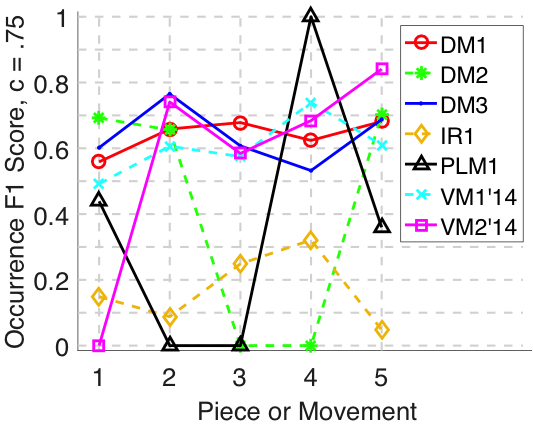

With regard to runtimes (Fig. 25), it should be noted that those for PLM1 and IR1 are somewhat harsh, because these submissions had to be run on slower machines than the Linux cluster on which the other submissions were run. This is a function of the programming language/operating system used by the researchers. After running for one week on piece 5 (the longest piece), PLM1 did not produce output, so it was assigned mean values over the remaining pieces (Figs. 14 and 15). It was assigned the maximum runtime over the remaining pieces (Fig. 25). The task captain accepts some responsibility for such issues: he should have made the longest piece in the development database far longer than any piece in the test database!

PLM1, DM2, VM1, and VM2 output far fewer patterns than other algorithms (see the n_Q column in Table 4). One potential application of pattern discovery algorithms is for playback accompanied by a visualization of repetitive structure. The relatively few patterns output by these algorithms make them more feasible candidates for this application.

symPoly

Meredith (2016) submitted three algorithms to the symPoly task version, selected according to performance on three-layer F1, precision, and recall metrics, respectively. DM3 outperforms DM2 on establishment recall (χ²(1) = 11.9189, p < .05, Bonferroni corrected), which is perhaps to be expected because DM3 was submitted by Meredith (2016) on the basis of a recall metric, whereas DM2 was submitted on the basis of a precision metric. Similarly, DM1 outperforms DM2 on establishment recall (χ²(1) = 23.5161, p < .005, Bonferroni corrected). Again, this is not particularly surprising because DM1 was submitted on the basis of an F1 performance metric (which combines recall and precision), whereas DM2 was submitted on the basis of a precision metric. DM3 is generally higher than DM2 on three-layer recall, and DM2 is mostly higher than DM3 on three-layer precision. Both of these results make sense, given the basis upon which these algorithms were submitted.

Discussion

To be added post-ISMIR!

I certainly have to step down as Task Captain next year, because I have too many other commitments.

It has already been discussed that next year we may switch to new training and test databases that focus not directly on the discovery task itself, but on an application of pattern discovery that can be used as a proxy to evaluate the extent to which algorithms have retrieved relevant repetitive material. For example, a prediction task. This may also bring interest from deep learners and/or cognitive scientists, looking to build on previous participants' work, which would be great. If you are interested in helping out with the preparation of a new version of the task, you are welcome to get in touch.

Tom Collins, New York, 2016

Results in Detail

symPoly

Figure 2. Establishment recall on a per-pattern basis. Establishment recall answers the following question. On average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype?

Figure 3. Occurrence recall on a per-pattern basis. Occurrence recall answers the following question. On average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set?

Figure 4. Establishment recall averaged over each piece/movement. Establishment recall answers the following question. On average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype?

Figure 5. Establishment precision averaged over each piece/movement. Establishment precision answers the following question. On average, how similar is the most similar ground-truth pattern prototype to an algorithm-output pattern?

Figure 6. Establishment F1 averaged over each piece/movement. Establishment F1 is an average of establishment precision and establishment recall.
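The establishment metrics in Figures 4-6 can be sketched in Python as follows. This is a simplified illustration rather than the official evaluation code: it assumes each pattern is stored as a list of its occurrences, that similarity is the default score |P ∩ Q|/max{|P|, |Q|}, and that the "average" of precision and recall is the usual harmonic mean 2PR/(P + R). The toy patterns are hypothetical.

```python
from typing import List, Set, Tuple

Point = Tuple[float, int]   # (ontime, pitch): an assumed encoding
Pattern = List[Set[Point]]  # a pattern is a list of its occurrences


def cardinality_score(p: Set[Point], q: Set[Point]) -> float:
    """|P n Q| / max(|P|, |Q|)."""
    return len(p & q) / max(len(p), len(q))


def establishment_matrix(truth: List[Pattern], output: List[Pattern]) -> List[List[float]]:
    """Entry (i, j): best score between any occurrence of truth i and of output j."""
    return [
        [max(cardinality_score(p, q) for p in gt for q in out) for out in output]
        for gt in truth
    ]


def establishment_prf(truth: List[Pattern], output: List[Pattern]) -> Tuple[float, float, float]:
    m = establishment_matrix(truth, output)
    # Recall: for each ground-truth pattern, its best-matching output pattern.
    recall = sum(max(row) for row in m) / len(truth)
    # Precision: for each output pattern, its best-matching ground-truth pattern.
    precision = sum(max(row[j] for row in m) for j in range(len(output))) / len(output)
    total = precision + recall
    f1 = 0.0 if total == 0 else 2 * precision * recall / total
    return precision, recall, f1


# Hypothetical data: two ground-truth patterns, one algorithm-output pattern.
truth = [
    [{(0.0, 60), (1.0, 62), (2.0, 64)}, {(4.0, 62), (5.0, 64), (6.0, 66)}],
    [{(8.0, 67), (9.0, 65)}, {(12.0, 67), (13.0, 65)}],
]
output = [
    [{(0.0, 60), (1.0, 62)}, {(4.0, 62), (5.0, 64)}],
]

precision, recall, f1 = establishment_prf(truth, output)
print(precision, recall, f1)
```

Because the matrix entry takes the maximum over all occurrence pairs, an output pattern is credited for establishing a ground-truth pattern if any one of its occurrences matches well, which is the sense of "up to time shift and transposition" in the introduction.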

Figure 7. Occurrence recall (c = .75) averaged over each piece/movement. Occurrence recall answers the following question. On average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set?

Figure 8. Occurrence precision (c = .75) averaged over each piece/movement. Occurrence precision answers the following question. On average, how similar is the most similar discovered ground-truth occurrence set to a set of algorithm-output pattern occurrences?

Figure 9. Occurrence F1 (c = .75) averaged over each piece/movement. Occurrence F1 is an average of occurrence precision and occurrence recall.

Figure 10. Three-layer recall averaged over each piece/movement. Rather than using as a similarity measure (which is the default for establishment recall), three-layer recall uses , which is a kind of F1 measure.

Figure 11. Three-layer precision averaged over each piece/movement. Rather than using as a similarity measure (which is the default for establishment precision), three-layer precision uses , which is a kind of F1 measure.

Figure 12. Three-layer F1 (TLF) averaged over each piece/movement. TLF is an average of three-layer precision and three-layer recall.

Figure 13. Log runtime of the algorithm for each piece/movement.

symMono

(Submission PLM1 did not complete on piece 5. The task captain decided to assign PLM1 the mean of each evaluation metric calculated across the remaining pieces, except for runtime, for which the maximum across the remaining pieces was assigned.)
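The rule described in this note can be sketched as follows. This is a minimal illustration, assuming the per-piece results are held in a dictionary; the function name `impute_missing_piece` and the data layout are hypothetical, and only three of the metric columns are shown. The values are copied from the PLM1 rows of Table 4.

```python
from statistics import mean

# PLM1 results on the pieces that completed (subset of columns from Table 4).
completed = {
    "piece1": {"P_est": 0.839, "R_est": 0.372, "runtime": 14436.0},
    "piece2": {"P_est": 0.242, "R_est": 0.236, "runtime": 103741.0},
    "piece3": {"P_est": 0.463, "R_est": 0.225, "runtime": 129.0},
    "piece4": {"P_est": 0.750, "R_est": 0.254, "runtime": 411.0},
}


def impute_missing_piece(rows, runtime_key="runtime"):
    """Fill in a piece that did not complete: mean per metric, maximum for runtime."""
    metric_names = next(iter(rows.values())).keys()
    imputed = {}
    for name in metric_names:
        values = [row[name] for row in rows.values()]
        # Runtime takes the worst observed value; every other metric takes the mean.
        imputed[name] = max(values) if name == runtime_key else mean(values)
    return imputed


piece5 = impute_missing_piece(completed)
```

Assigning the maximum runtime rather than the mean avoids making an algorithm that failed to finish look faster than it was on the pieces it did complete.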

Figure 14. Establishment recall on a per-pattern basis. Establishment recall answers the following question. On average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype?

Figure 15. Occurrence recall on a per-pattern basis. Occurrence recall answers the following question. On average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set?

Figure 16. Establishment recall averaged over each piece/movement. Establishment recall answers the following question. On average, how similar is the most similar algorithm-output pattern to a ground-truth pattern prototype?

Figure 17. Establishment precision averaged over each piece/movement. Establishment precision answers the following question. On average, how similar is the most similar ground-truth pattern prototype to an algorithm-output pattern?

Figure 18. Establishment F1 averaged over each piece/movement. Establishment F1 is the harmonic mean of establishment precision and establishment recall.

Figure 19. Occurrence recall averaged over each piece/movement. Occurrence recall answers the following question. On average, how similar is the most similar set of algorithm-output pattern occurrences to a discovered ground-truth occurrence set?

Figure 20. Occurrence precision averaged over each piece/movement. Occurrence precision answers the following question. On average, how similar is the most similar discovered ground-truth occurrence set to a set of algorithm-output pattern occurrences?

Figure 21. Occurrence F1 averaged over each piece/movement. Occurrence F1 is the harmonic mean of occurrence precision and occurrence recall.

Figure 22. Three-layer recall averaged over each piece/movement. Rather than using the default similarity measure for establishment recall, three-layer recall uses a similarity measure that is a kind of F1 measure.

Figure 23. Three-layer precision averaged over each piece/movement. Rather than using the default similarity measure for establishment precision, three-layer precision uses a similarity measure that is a kind of F1 measure.

Figure 24. Three-layer F1 (TLF) averaged over each piece/movement. TLF is the harmonic mean of three-layer precision and three-layer recall.

Figure 25. Log runtime of the algorithm for each piece/movement.

Tabular Versions of Plots

symPoly

| AlgIdx | AlgStub | Piece | n_P | n_Q | P_est | R_est | F1_est | P_occ(c=.75) | R_occ(c=.75) | F_1occ(c=.75) | P_3 | R_3 | TLF_1 | runtime | FRT | FFTP_est | FFP | P_occ(c=.5) | R_occ(c=.5) | F_1occ(c=.5) | P | R | F_1 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | DM1 | piece1 | 5 | 30.000 | 0.328 | 0.431 | 0.372 | 0.000 | 0.000 | 0.000 | 0.215 | 0.326 | 0.259 | 74.000 | 0.000 | 0.366 | 0.330 | 0.284 | 0.430 | 0.342 | 0.000 | 0.000 | 0.000 |

| 1 | DM1 | piece2 | 5 | 63.000 | 0.565 | 0.661 | 0.609 | 0.508 | 0.936 | 0.658 | 0.438 | 0.468 | 0.452 | 727.000 | 0.000 | 0.285 | 0.357 | 0.485 | 0.944 | 0.641 | 0.000 | 0.000 | 0.000 |

| 1 | DM1 | piece3 | 10.000 | 28.000 | 0.703 | 0.685 | 0.694 | 0.520 | 0.784 | 0.625 | 0.620 | 0.536 | 0.575 | 35.000 | 0.000 | 0.447 | 0.688 | 0.496 | 0.749 | 0.597 | 0.000 | 0.000 | 0.000 |

| 1 | DM1 | piece4 | 5 | 19.000 | 0.410 | 0.495 | 0.448 | 0.000 | 0.000 | 0.000 | 0.327 | 0.340 | 0.333 | 2.000 | 0.000 | 0.416 | 0.271 | 0.255 | 0.564 | 0.351 | 0.000 | 0.000 | 0.000 |

| 1 | DM1 | piece5 | 13.000 | 56.000 | 0.766 | 0.612 | 0.680 | 0.543 | 0.941 | 0.688 | 0.634 | 0.467 | 0.538 | 1894.000 | 0.000 | 0.391 | 0.686 | 0.513 | 0.890 | 0.651 | 0.000 | 0.000 | 0.000 |

| 2 | DM2 | piece1 | 5 | 10.000 | 0.353 | 0.291 | 0.319 | 0.000 | 0.000 | 0.000 | 0.242 | 0.174 | 0.202 | 77.000 | 0.000 | 0.291 | 0.268 | 0.424 | 0.156 | 0.228 | 0.000 | 0.000 | 0.000 |

| 2 | DM2 | piece2 | 5 | 10.000 | 0.320 | 0.306 | 0.313 | 0.000 | 0.000 | 0.000 | 0.295 | 0.253 | 0.273 | 710.000 | 0.000 | 0.306 | 0.449 | 0.388 | 0.692 | 0.497 | 0.000 | 0.000 | 0.000 |

| 2 | DM2 | piece3 | 10.000 | 10.000 | 0.777 | 0.497 | 0.606 | 0.710 | 0.802 | 0.753 | 0.737 | 0.450 | 0.559 | 33.000 | 0.000 | 0.435 | 0.711 | 0.651 | 0.775 | 0.708 | 0.000 | 0.000 | 0.000 |

| 2 | DM2 | piece4 | 5 | 10.000 | 0.519 | 0.476 | 0.496 | 0.565 | 0.952 | 0.709 | 0.450 | 0.293 | 0.355 | 1.000 | 0.000 | 0.280 | 0.626 | 0.441 | 0.839 | 0.578 | 0.000 | 0.000 | 0.000 |

| 2 | DM2 | piece5 | 13.000 | 10.000 | 0.857 | 0.397 | 0.543 | 0.614 | 0.913 | 0.734 | 0.717 | 0.358 | 0.478 | 1734.000 | 0.000 | 0.391 | 0.686 | 0.597 | 0.857 | 0.704 | 0.000 | 0.000 | 0.000 |

| 3 | DM3 | piece1 | 5 | 41.000 | 0.357 | 0.528 | 0.426 | 0.406 | 0.439 | 0.422 | 0.257 | 0.422 | 0.319 | 509.000 | 0.000 | 0.498 | 0.439 | 0.369 | 0.516 | 0.430 | 0.000 | 0.000 | 0.000 |

| 3 | DM3 | piece2 | 5 | 71.000 | 0.621 | 0.691 | 0.654 | 0.605 | 0.945 | 0.738 | 0.559 | 0.599 | 0.578 | 2109.000 | 0.000 | 0.290 | 0.305 | 0.533 | 0.908 | 0.672 | 0.000 | 0.000 | 0.000 |

| 3 | DM3 | piece3 | 10.000 | 25.000 | 0.594 | 0.589 | 0.592 | 0.528 | 0.777 | 0.629 | 0.585 | 0.522 | 0.551 | 89.000 | 0.000 | 0.302 | 0.687 | 0.481 | 0.728 | 0.580 | 0.000 | 0.000 | 0.000 |

| 3 | DM3 | piece4 | 5 | 24.000 | 0.465 | 0.488 | 0.476 | 0.355 | 0.765 | 0.485 | 0.352 | 0.337 | 0.344 | 2.000 | 0.000 | 0.415 | 0.583 | 0.279 | 0.617 | 0.384 | 0.000 | 0.000 | 0.000 |

| 3 | DM3 | piece5 | 13.000 | 75.000 | 0.697 | 0.470 | 0.561 | 0.644 | 0.948 | 0.767 | 0.657 | 0.443 | 0.529 | 4765.000 | 0.000 | 0.205 | 0.739 | 0.598 | 0.881 | 0.713 | 0.000 | 0.000 | 0.000 |

Table 2. Tabular version of Figures 4-13.
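The F1 columns in Table 2 are consistent with a harmonic mean of the corresponding precision and recall columns. The short check below illustrates this for the DM1 rows; the helper name `f1` and the tolerance are choices made for this sketch, and small discrepancies are expected because the table values are rounded to three decimal places.

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall, defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P_est, R_est, F1_est) for DM1, copied from Table 2.
dm1_rows = [
    (0.328, 0.431, 0.372),  # piece1
    (0.565, 0.661, 0.609),  # piece2
    (0.703, 0.685, 0.694),  # piece3
    (0.410, 0.495, 0.448),  # piece4
    (0.766, 0.612, 0.680),  # piece5
]

# Largest gap between the recomputed and the reported F1 values.
max_error = max(abs(f1(p, r) - reported) for p, r, reported in dm1_rows)
```

The same function applies to the occurrence and three-layer columns, since each F1 value is derived from its own precision and recall pair.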

| AlgIdx | AlgStub | Piece | n_P | R_est | R_occ(c=.75) | R_occ(c=.5) | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | DM1 | piece1 | 5 | |||||||||

| 0.882 | 0.900 | 0.500 | 0.450 | 0.867 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.778 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.778 | ||||||||

| 1 | DM1 | piece2 | 5 | |||||||||

| 0.444 | 0.875 | 0.500 | 0.908 | 0.927 | ||||||||

| 0.00000 | 0.802 | 0.000 | 0.908 | 0.923 | ||||||||

| 0.00000 | 0.802 | 0.000 | 0.908 | 0.923 | ||||||||

| 1 | DM1 | piece3 | 10.000 | |||||||||

| 0.455 | 0.605 | 0.767 | 0.364 | 0.500 | 0.250 | 0.045 | 0.562 | 0.417 | 0.333 | |||

| 0.00000 | 0.000 | 0.678 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 0.00000 | 0.000 | 0.678 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 1 | DM1 | piece4 | 8 | |||||||||

| 1.00000 | 0.857 | 0.385 | 0.444 | 0.867 | 0.350 | 0.250 | 0.846 | |||||

| 0.750 | 0.643 | 0.000 | 0.000 | 0.867 | 0.000 | 0.000 | 0.846 | |||||

| 0.750 | 0.643 | 0.000 | 0.000 | 0.867 | 0.000 | 0.000 | 0.846 | |||||

| 1 | DM1 | piece5 | 13.000 | |||||||||

| 0.560 | 0.120 | 0.967 | 0.984 | 0.857 | 0.172 | 0.130 | 0.333 | 0.122 | 0.675 | 0.636 | 0.938 | 0.752 |

| 0.00000 | 0.000 | 0.967 | 0.949 | 0.711 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.926 | 0.752 |

| 0.00000 | 0.000 | 0.967 | 0.949 | 0.711 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.926 | 0.752 |

| 2 | DM2 | piece1 | 5 | |||||||||

| 1.00000 | 1.000 | 0.125 | 0.000 | 0.682 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.000 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.000 | ||||||||

| 2 | DM2 | piece2 | 5 | |||||||||

| 0.056 | 0.348 | 0.021 | 0.876 | 0.419 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.875 | 0.000 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.875 | 0.000 | ||||||||

| 2 | DM2 | piece3 | 10.000 | |||||||||

| 0.349 | 0.612 | 0.619 | 0.163 | 0.500 | 0.250 | 0.045 | 0.562 | 0.146 | 0.083 | |||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 2 | DM2 | piece4 | 8 | |||||||||

| 0.667 | 0.538 | 0.625 | 0.571 | 0.469 | 0.643 | 0.450 | 0.591 | |||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||||

| 2 | DM2 | piece5 | 13.000 | |||||||||

| 0.094 | 0.092 | 0.350 | 0.273 | 0.254 | 0.122 | 0.051 | 0.069 | 0.084 | 0.462 | 0.243 | 0.938 | 0.515 |

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.926 | 0.000 |

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.926 | 0.000 |

| 3 | DM3 | piece1 | 5 | |||||||||

| 0.882 | 0.857 | 0.333 | 0.273 | 0.867 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.778 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.778 | ||||||||

| 3 | DM3 | piece2 | 5 | |||||||||

| 0.800 | 0.438 | 0.333 | 0.672 | 0.920 | ||||||||

| 0.739 | 0.000 | 0.000 | 0.000 | 0.913 | ||||||||

| 0.739 | 0.000 | 0.000 | 0.000 | 0.913 | ||||||||

| 3 | DM3 | piece3 | 10.000 | |||||||||

| 0.600 | 0.674 | 0.722 | 1.000 | 0.579 | 0.355 | 0.065 | 0.795 | 1.000 | 0.667 | |||

| 0.00000 | 0.000 | 0.000 | 0.190 | 0.000 | 0.000 | 0.000 | 0.751 | 0.667 | 0.000 | |||

| 0.00000 | 0.000 | 0.000 | 0.190 | 0.000 | 0.000 | 0.000 | 0.751 | 0.667 | 0.000 | |||

| 3 | DM3 | piece4 | 8 | |||||||||

| 1.00000 | 0.857 | 0.385 | 0.364 | 0.875 | 0.619 | 0.600 | 0.857 | |||||

| 0.750 | 0.643 | 0.000 | 0.000 | 0.867 | 0.000 | 0.000 | 0.846 | |||||

| 0.750 | 0.643 | 0.000 | 0.000 | 0.867 | 0.000 | 0.000 | 0.846 | |||||

| 3 | DM3 | piece5 | 13.000 | |||||||||

| 0.591 | 0.500 | 0.846 | 0.980 | 0.281 | 0.145 | 0.067 | 0.075 | 0.083 | 0.835 | 0.286 | 0.936 | 0.742 |

| 0.00000 | 0.000 | 0.846 | 0.947 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.504 | 0.000 | 0.924 | 0.000 |

| 0.00000 | 0.000 | 0.846 | 0.947 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.504 | 0.000 | 0.924 | 0.000 |

| 4 | VM1 | piece1 | 5 | |||||||||

| 0.944 | 1.000 | 1.000 | 0.364 | 0.938 | ||||||||

| 0.775 | 0.771 | 0.784 | 0.000 | 0.782 | ||||||||

| 0.775 | 0.771 | 0.784 | 0.000 | 0.782 | ||||||||

| 4 | VM1 | piece2 | 5 | |||||||||

| 0.800 | 0.812 | 0.600 | 0.936 | 0.934 | ||||||||

| 0.614 | 0.734 | 0.000 | 0.936 | 0.934 | ||||||||

| 0.614 | 0.734 | 0.000 | 0.936 | 0.934 | ||||||||

| 4 | VM1 | piece3 | 10.000 | |||||||||

| 0.647 | 0.752 | 0.846 | 1.000 | 0.579 | 0.314 | 0.500 | 0.742 | 1.000 | 1.000 | |||

| 0.00000 | 0.752 | 0.724 | 0.831 | 0.000 | 0.000 | 0.000 | 0.000 | 0.905 | 1.000 | |||

| 0.00000 | 0.752 | 0.724 | 0.831 | 0.000 | 0.000 | 0.000 | 0.000 | 0.905 | 1.000 | |||

| 4 | VM1 | piece4 | 8 | |||||||||

| 1.00000 | 0.857 | 0.600 | 1.000 | 1.000 | 1.000 | 0.900 | 1.000 | |||||

| 0.860 | 0.429 | 0.000 | 1.000 | 1.000 | 0.912 | 0.750 | 1.000 | |||||

| 0.860 | 0.429 | 0.000 | 1.000 | 1.000 | 0.912 | 0.750 | 1.000 | |||||

| 4 | VM1 | piece5 | 13.000 | |||||||||

| 0.857 | 0.714 | 0.989 | 0.993 | 0.968 | 0.400 | 0.125 | 0.529 | 0.500 | 0.771 | 0.800 | 0.668 | 0.573 |

| 0.857 | 0.000 | 0.989 | 0.993 | 0.968 | 0.000 | 0.000 | 0.000 | 0.000 | 0.366 | 0.746 | 0.000 | 0.000 |

| 0.857 | 0.000 | 0.989 | 0.993 | 0.968 | 0.000 | 0.000 | 0.000 | 0.000 | 0.366 | 0.746 | 0.000 | 0.000 |

| 5 | VM2 | piece1 | 5 | |||||||||

| 0.727 | 0.719 | 0.625 | 0.111 | 0.667 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | ||||||||

| 5 | VM2 | piece2 | 5 | |||||||||

| 0.538 | 0.812 | 0.600 | 0.920 | 0.934 | ||||||||

| 0.00000 | 0.734 | 0.000 | 0.920 | 0.934 | ||||||||

| 0.00000 | 0.734 | 0.000 | 0.920 | 0.934 | ||||||||

| 5 | VM2 | piece3 | 10.000 | |||||||||

| 0.786 | 0.744 | 0.962 | 0.278 | 0.395 | 0.360 | 0.250 | 0.677 | 0.571 | 0.190 | |||

| 0.384 | 0.000 | 0.498 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 0.384 | 0.000 | 0.498 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 5 | VM2 | piece4 | 8 | |||||||||

| 0.667 | 0.571 | 0.600 | 1.000 | 1.000 | 0.714 | 0.800 | 0.769 | |||||

| 0.00000 | 0.000 | 0.000 | 0.911 | 1.000 | 0.000 | 0.733 | 0.769 | |||||

| 0.00000 | 0.000 | 0.000 | 0.911 | 1.000 | 0.000 | 0.733 | 0.769 | |||||

| 5 | VM2 | piece5 | 13.000 | |||||||||

| 0.609 | 0.087 | 0.974 | 0.982 | 0.633 | 0.600 | 0.333 | 0.111 | 0.084 | 0.800 | 0.667 | 0.652 | 0.488 |

| 0.00000 | 0.000 | 0.974 | 0.982 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.388 | 0.000 | 0.000 | 0.000 |

| 0.00000 | 0.000 | 0.974 | 0.982 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.388 | 0.000 | 0.000 | 0.000 |

| 6 | IR1 | piece1 | 5 | |||||||||

| 0.765 | 0.684 | 0.714 | 0.750 | 0.600 | ||||||||

| 0.074 | 0.000 | 0.000 | 0.375 | 0.000 | ||||||||

| 0.074 | 0.000 | 0.000 | 0.375 | 0.000 | ||||||||

| 6 | IR1 | piece2 | 5 | |||||||||

| 0.727 | 0.812 | 0.600 | 0.024 | 0.154 | ||||||||

| 0.00000 | 0.109 | 0.000 | 0.000 | 0.000 | ||||||||

| 0.00000 | 0.109 | 0.000 | 0.000 | 0.000 | ||||||||

| 6 | IR1 | piece3 | 10.000 | |||||||||

| 0.647 | 0.062 | 0.742 | 0.833 | 0.237 | 0.474 | 0.250 | 0.839 | 0.857 | 0.750 | |||

| 0.00000 | 0.000 | 0.000 | 0.144 | 0.000 | 0.000 | 0.000 | 0.468 | 0.238 | 0.188 | |||

| 0.00000 | 0.000 | 0.000 | 0.144 | 0.000 | 0.000 | 0.000 | 0.468 | 0.238 | 0.188 | |||

| 6 | IR1 | piece4 | 8 | |||||||||

| 0.600 | 0.429 | 0.000 | 0.800 | 0.133 | 0.143 | 0.778 | 0.692 | |||||

| 0.00000 | 0.000 | 0.000 | 0.177 | 0.000 | 0.000 | 0.389 | 0.000 | |||||

| 0.00000 | 0.000 | 0.000 | 0.177 | 0.000 | 0.000 | 0.389 | 0.000 | |||||

| 6 | IR1 | piece5 | 13.000 | |||||||||

| 0.500 | 0.625 | 0.015 | 0.081 | 0.333 | 0.364 | 0.700 | 0.889 | 0.800 | 0.359 | 0.652 | 0.134 | 0.181 |

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.136 | 0.198 | 0.000 | 0.000 | 0.000 | 0.000 |

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.136 | 0.198 | 0.000 | 0.000 | 0.000 | 0.000 |

| 7 | PLM1 | piece1 | 5 | |||||||||

| 0.941 | 0.842 | 0.077 | 0.000 | 0.000 | ||||||||

| 0.309 | 0.265 | 0.000 | 0.000 | 0.000 | ||||||||

| 0.309 | 0.265 | 0.000 | 0.000 | 0.000 | ||||||||

| 7 | PLM1 | piece2 | 5 | |||||||||

| 0.333 | 0.552 | 0.125 | 0.116 | 0.053 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | ||||||||

| 7 | PLM1 | piece3 | 10.000 | |||||||||

| 0.312 | 0.357 | 0.553 | 0.146 | 0.474 | 0.344 | 0.062 | 0.000 | 0.000 | 0.000 | |||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 7 | PLM1 | piece4 | 8 | |||||||||

| 0.250 | 0.500 | 0.000 | 0.286 | 0.000 | 1.000 | 0.000 | 0.000 | |||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 | 0.000 | |||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 | 0.000 | |||||

| 7 | PLM1 | piece5 | 13.000 | |||||||||

| 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 |

| 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 |

| 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 |

Table 3. Tabular version of Figures 2 and 3.

symMono

| AlgIdx | AlgStub | Piece | n_P | n_Q | P_est | R_est | F1_est | P_occ(c=.75) | R_occ(c=.75) | F_1occ(c=.75) | P_3 | R_3 | TLF_1 | runtime | FRT | FFTP_est | FFP | P_occ(c=.5) | R_occ(c=.5) | F_1occ(c=.5) | P | R | F_1 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | DM1 | piece1 | 5 | 44.000 | 0.410 | 0.720 | 0.523 | 0.470 | 0.689 | 0.559 | 0.237 | 0.537 | 0.328 | 152.000 | 0.000 | 0.518 | 0.606 | 0.388 | 0.695 | 0.498 | 0.000 | 0.000 | 0.000 |

| 1 | DM1 | piece2 | 5 | 47.000 | 0.535 | 0.731 | 0.618 | 0.518 | 0.904 | 0.659 | 0.453 | 0.616 | 0.522 | 90.000 | 0.000 | 0.208 | 0.344 | 0.434 | 0.883 | 0.582 | 0.000 | 0.000 | 0.000 |

| 1 | DM1 | piece3 | 10.000 | 13.000 | 0.484 | 0.430 | 0.455 | 0.678 | 0.678 | 0.678 | 0.481 | 0.386 | 0.429 | 2.000 | 0.000 | 0.359 | 0.542 | 0.402 | 0.605 | 0.483 | 0.000 | 0.000 | 0.000 |

| 1 | DM1 | piece4 | 8 | 24.000 | 0.331 | 0.625 | 0.432 | 0.524 | 0.771 | 0.624 | 0.274 | 0.425 | 0.333 | 1.000 | 0.000 | 0.519 | 0.497 | 0.445 | 0.802 | 0.572 | 0.042 | 0.125 | 0.062 |

| 1 | DM1 | piece5 | 13.000 | 72.000 | 0.740 | 0.558 | 0.636 | 0.538 | 0.930 | 0.682 | 0.640 | 0.445 | 0.525 | 688.000 | 0.000 | 0.263 | 0.650 | 0.517 | 0.869 | 0.648 | 0.000 | 0.000 | 0.000 |

| 2 | DM2 | piece1 | 5 | 10.000 | 0.709 | 0.561 | 0.627 | 0.687 | 0.697 | 0.692 | 0.448 | 0.435 | 0.441 | 139.000 | 0.000 | 0.436 | 0.534 | 0.541 | 0.699 | 0.610 | 0.100 | 0.200 | 0.133 |

| 2 | DM2 | piece2 | 5 | 10.000 | 0.395 | 0.344 | 0.368 | 0.524 | 0.875 | 0.656 | 0.375 | 0.277 | 0.319 | 64.000 | 0.000 | 0.208 | 0.344 | 0.350 | 0.875 | 0.500 | 0.000 | 0.000 | 0.000 |

| 2 | DM2 | piece3 | 10.000 | 10.000 | 0.520 | 0.333 | 0.406 | 0.000 | 0.000 | 0.000 | 0.490 | 0.342 | 0.403 | 2.000 | 0.000 | 0.333 | 0.534 | 0.370 | 0.536 | 0.438 | 0.000 | 0.000 | 0.000 |

| 2 | DM2 | piece4 | 8 | 10.000 | 0.290 | 0.569 | 0.384 | 0.000 | 0.000 | 0.000 | 0.205 | 0.237 | 0.220 | 1.000 | 0.000 | 0.197 | 0.225 | 0.166 | 0.405 | 0.236 | 0.000 | 0.000 | 0.000 |

| 2 | DM2 | piece5 | 13.000 | 10.000 | 0.821 | 0.273 | 0.409 | 0.564 | 0.926 | 0.701 | 0.683 | 0.234 | 0.349 | 575.000 | 0.000 | 0.263 | 0.650 | 0.540 | 0.865 | 0.665 | 0.000 | 0.000 | 0.000 |

| 3 | DM3 | piece1 | 5 | 44.000 | 0.364 | 0.642 | 0.465 | 0.518 | 0.717 | 0.602 | 0.224 | 0.520 | 0.313 | 156.000 | 0.000 | 0.518 | 0.606 | 0.404 | 0.736 | 0.521 | 0.000 | 0.000 | 0.000 |

| 3 | DM3 | piece2 | 5 | 47.000 | 0.544 | 0.633 | 0.585 | 0.673 | 0.886 | 0.765 | 0.508 | 0.571 | 0.538 | 234.000 | 0.000 | 0.572 | 0.578 | 0.545 | 0.824 | 0.656 | 0.021 | 0.200 | 0.038 |

| 3 | DM3 | piece3 | 10.000 | 14.000 | 0.633 | 0.646 | 0.639 | 0.700 | 0.536 | 0.607 | 0.534 | 0.523 | 0.528 | 4.000 | 0.000 | 0.294 | 0.588 | 0.502 | 0.565 | 0.531 | 0.071 | 0.100 | 0.083 |

| 3 | DM3 | piece4 | 8 | 26.000 | 0.376 | 0.695 | 0.488 | 0.398 | 0.804 | 0.532 | 0.260 | 0.404 | 0.316 | 2.000 | 0.000 | 0.671 | 0.505 | 0.287 | 0.709 | 0.409 | 0.038 | 0.125 | 0.059 |

| 3 | DM3 | piece5 | 13.000 | 60.000 | 0.618 | 0.490 | 0.547 | 0.570 | 0.866 | 0.688 | 0.589 | 0.414 | 0.486 | 848.000 | 0.000 | 0.311 | 0.705 | 0.528 | 0.806 | 0.638 | 0.000 | 0.000 | 0.000 |

| 4 | VM1 | piece1 | 5 | 7 | 0.557 | 0.849 | 0.673 | 0.360 | 0.777 | 0.492 | 0.344 | 0.362 | 0.353 | 61.569 | 0.000 | 0.849 | 0.482 | 0.372 | 0.777 | 0.503 | 0.000 | 0.000 | 0.000 |

| 4 | VM1 | piece2 | 5 | 7 | 0.412 | 0.817 | 0.548 | 0.486 | 0.805 | 0.606 | 0.320 | 0.430 | 0.367 | 393.263 | 0.000 | 0.472 | 0.074 | 0.399 | 0.764 | 0.524 | 0.286 | 0.400 | 0.333 |

| 4 | VM1 | piece3 | 10.000 | 7 | 0.773 | 0.738 | 0.755 | 0.442 | 0.823 | 0.575 | 0.627 | 0.521 | 0.569 | 12.921 | 0.000 | 0.628 | 0.557 | 0.533 | 0.703 | 0.606 | 0.000 | 0.000 | 0.000 |

| 4 | VM1 | piece4 | 8 | 7 | 0.932 | 0.920 | 0.926 | 0.644 | 0.869 | 0.740 | 0.749 | 0.620 | 0.679 | 66.093 | 0.000 | 0.812 | 0.735 | 0.526 | 0.855 | 0.651 | 0.286 | 0.250 | 0.267 |

| 4 | VM1 | piece5 | 13.000 | 7 | 0.819 | 0.684 | 0.745 | 0.512 | 0.755 | 0.610 | 0.675 | 0.400 | 0.503 | 51.341 | 0.000 | 0.566 | 0.549 | 0.414 | 0.656 | 0.507 | 0.286 | 0.154 | 0.200 |

| 5 | VM2 | piece1 | 5 | 5 | 0.540 | 0.570 | 0.555 | 0.000 | 0.000 | 0.000 | 0.286 | 0.207 | 0.240 | 18.250 | 0.000 | 0.570 | 0.286 | 0.291 | 0.490 | 0.365 | 0.000 | 0.000 | 0.000 |

| 5 | VM2 | piece2 | 5 | 7 | 0.446 | 0.761 | 0.562 | 0.649 | 0.863 | 0.741 | 0.357 | 0.488 | 0.413 | 32.966 | 0.000 | 0.419 | 0.128 | 0.427 | 0.630 | 0.509 | 0.143 | 0.200 | 0.167 |

| 5 | VM2 | piece3 | 10.000 | 7 | 0.690 | 0.521 | 0.594 | 0.865 | 0.441 | 0.584 | 0.609 | 0.471 | 0.531 | 3.580 | 0.000 | 0.393 | 0.541 | 0.662 | 0.561 | 0.607 | 0.000 | 0.000 | 0.000 |

| 5 | VM2 | piece4 | 8 | 6 | 0.842 | 0.765 | 0.802 | 0.579 | 0.837 | 0.684 | 0.732 | 0.504 | 0.597 | 5.863 | 0.000 | 0.721 | 0.711 | 0.410 | 0.732 | 0.525 | 0.167 | 0.125 | 0.143 |

| 5 | VM2 | piece5 | 13.000 | 7 | 0.739 | 0.540 | 0.624 | 0.910 | 0.781 | 0.841 | 0.677 | 0.434 | 0.529 | 37.876 | 0.000 | 0.420 | 0.554 | 0.517 | 0.636 | 0.570 | 0.000 | 0.000 | 0.000 |

| 6 | IR1 | piece1 | 5 | 100.000 | 0.557 | 0.703 | 0.622 | 0.123 | 0.188 | 0.149 | 0.177 | 0.256 | 0.209 | 388.120 | 0.000 | 0.499 | 0.208 | 0.175 | 0.191 | 0.183 | 0.000 | 0.000 | 0.000 |

| 6 | IR1 | piece2 | 5 | 100.000 | 0.685 | 0.464 | 0.553 | 0.074 | 0.109 | 0.088 | 0.093 | 0.105 | 0.099 | 7384.700 | 0.000 | 0.459 | 0.084 | 0.070 | 0.096 | 0.081 | 0.000 | 0.000 | 0.000 |

| 6 | IR1 | piece3 | 10.000 | 100.000 | 0.820 | 0.569 | 0.672 | 0.212 | 0.301 | 0.249 | 0.274 | 0.201 | 0.232 | 52.520 | 0.000 | 0.449 | 0.279 | 0.228 | 0.223 | 0.225 | 0.000 | 0.000 | 0.000 |

| 6 | IR1 | piece4 | 8 | 80.000 | 0.544 | 0.447 | 0.491 | 0.371 | 0.283 | 0.321 | 0.313 | 0.252 | 0.279 | 1.800 | 0.000 | 0.246 | 0.263 | 0.358 | 0.364 | 0.361 | 0.000 | 0.000 | 0.000 |

| 6 | IR1 | piece5 | 13.000 | 100.000 | 0.807 | 0.433 | 0.564 | 0.028 | 0.172 | 0.048 | 0.113 | 0.095 | 0.103 | 37865.940 | 0.000 | 0.433 | 0.105 | 0.063 | 0.135 | 0.086 | 0.000 | 0.000 | 0.000 |

| 7 | PLM1 | piece1 | 5 | 4 | 0.839 | 0.372 | 0.515 | 0.860 | 0.295 | 0.439 | 0.399 | 0.190 | 0.258 | 14436.000 | 0.000 | 0.372 | 0.399 | 0.775 | 0.287 | 0.419 | 0.000 | 0.000 | 0.000 |

| 7 | PLM1 | piece2 | 5 | 6 | 0.242 | 0.236 | 0.239 | 0.000 | 0.000 | 0.000 | 0.194 | 0.199 | 0.196 | 103741.000 | 0.000 | 0.122 | 0.138 | 0.552 | 0.276 | 0.368 | 0.000 | 0.000 | 0.000 |

| 7 | PLM1 | piece3 | 10.000 | 3 | 0.463 | 0.225 | 0.303 | 0.000 | 0.000 | 0.000 | 0.537 | 0.275 | 0.364 | 129.000 | 0.000 | 0.225 | 0.537 | 0.549 | 0.500 | 0.524 | 0.000 | 0.000 | 0.000 |

| 7 | PLM1 | piece4 | 8 | 2 | 0.750 | 0.254 | 0.380 | 1.000 | 1.000 | 1.000 | 0.734 | 0.208 | 0.325 | 411.000 | 0.000 | 0.254 | 0.734 | 0.719 | 0.651 | 0.683 | 0.500 | 0.125 | 0.200 |

Table 4. Tabular version of Figures 16-25.

| AlgIdx | AlgStub | Piece | n_P | R_est | R_occ(c=.75) | R_occ(c=.5) | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | DM1 | piece1 | 5 | |||||||||

| 0.882 | 0.900 | 0.500 | 0.450 | 0.867 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.778 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.778 | ||||||||

| 1 | DM1 | piece2 | 5 | |||||||||

| 0.444 | 0.875 | 0.500 | 0.908 | 0.927 | ||||||||

| 0.00000 | 0.802 | 0.000 | 0.908 | 0.923 | ||||||||

| 0.00000 | 0.802 | 0.000 | 0.908 | 0.923 | ||||||||

| 1 | DM1 | piece3 | 10.000 | |||||||||

| 0.455 | 0.605 | 0.767 | 0.364 | 0.500 | 0.250 | 0.045 | 0.562 | 0.417 | 0.333 | |||

| 0.00000 | 0.000 | 0.678 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 0.00000 | 0.000 | 0.678 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 1 | DM1 | piece4 | 8 | |||||||||

| 1.00000 | 0.857 | 0.385 | 0.444 | 0.867 | 0.350 | 0.250 | 0.846 | |||||

| 0.750 | 0.643 | 0.000 | 0.000 | 0.867 | 0.000 | 0.000 | 0.846 | |||||

| 0.750 | 0.643 | 0.000 | 0.000 | 0.867 | 0.000 | 0.000 | 0.846 | |||||

| 1 | DM1 | piece5 | 13.000 | |||||||||

| 0.560 | 0.120 | 0.967 | 0.984 | 0.857 | 0.172 | 0.130 | 0.333 | 0.122 | 0.675 | 0.636 | 0.938 | 0.752 |

| 0.00000 | 0.000 | 0.967 | 0.949 | 0.711 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.926 | 0.752 |

| 0.00000 | 0.000 | 0.967 | 0.949 | 0.711 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.926 | 0.752 |

| 2 | DM2 | piece1 | 5 | |||||||||

| 1.00000 | 1.000 | 0.125 | 0.000 | 0.682 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.000 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.000 | ||||||||

| 2 | DM2 | piece2 | 5 | |||||||||

| 0.056 | 0.348 | 0.021 | 0.876 | 0.419 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.875 | 0.000 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.875 | 0.000 | ||||||||

| 2 | DM2 | piece3 | 10.000 | |||||||||

| 0.349 | 0.612 | 0.619 | 0.163 | 0.500 | 0.250 | 0.045 | 0.562 | 0.146 | 0.083 | |||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 2 | DM2 | piece4 | 8 | |||||||||

| 0.667 | 0.538 | 0.625 | 0.571 | 0.469 | 0.643 | 0.450 | 0.591 | |||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||||

| 2 | DM2 | piece5 | 13.000 | |||||||||

| 0.094 | 0.092 | 0.350 | 0.273 | 0.254 | 0.122 | 0.051 | 0.069 | 0.084 | 0.462 | 0.243 | 0.938 | 0.515 |

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.926 | 0.000 |

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.926 | 0.000 |

| 3 | DM3 | piece1 | 5 | |||||||||

| 0.882 | 0.857 | 0.333 | 0.273 | 0.867 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.778 | ||||||||

| 0.866 | 0.613 | 0.000 | 0.000 | 0.778 | ||||||||

| 3 | DM3 | piece2 | 5 | |||||||||

| 0.800 | 0.438 | 0.333 | 0.672 | 0.920 | ||||||||

| 0.739 | 0.000 | 0.000 | 0.000 | 0.913 | ||||||||

| 0.739 | 0.000 | 0.000 | 0.000 | 0.913 | ||||||||

| 3 | DM3 | piece3 | 10.000 | |||||||||

| 0.600 | 0.674 | 0.722 | 1.000 | 0.579 | 0.355 | 0.065 | 0.795 | 1.000 | 0.667 | |||

| 0.00000 | 0.000 | 0.000 | 0.190 | 0.000 | 0.000 | 0.000 | 0.751 | 0.667 | 0.000 | |||

| 0.00000 | 0.000 | 0.000 | 0.190 | 0.000 | 0.000 | 0.000 | 0.751 | 0.667 | 0.000 | |||

| 3 | DM3 | piece4 | 8 | |||||||||

| 1.00000 | 0.857 | 0.385 | 0.364 | 0.875 | 0.619 | 0.600 | 0.857 | |||||

| 0.750 | 0.643 | 0.000 | 0.000 | 0.867 | 0.000 | 0.000 | 0.846 | |||||

| 0.750 | 0.643 | 0.000 | 0.000 | 0.867 | 0.000 | 0.000 | 0.846 | |||||

| 3 | DM3 | piece5 | 13.000 | |||||||||

| 0.591 | 0.500 | 0.846 | 0.980 | 0.281 | 0.145 | 0.067 | 0.075 | 0.083 | 0.835 | 0.286 | 0.936 | 0.742 |

| 0.00000 | 0.000 | 0.846 | 0.947 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.504 | 0.000 | 0.924 | 0.000 |

| 0.00000 | 0.000 | 0.846 | 0.947 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.504 | 0.000 | 0.924 | 0.000 |

| 4 | VM1 | piece1 | 5 | |||||||||

| 0.944 | 1.000 | 1.000 | 0.364 | 0.938 | ||||||||

| 0.775 | 0.771 | 0.784 | 0.000 | 0.782 | ||||||||

| 0.775 | 0.771 | 0.784 | 0.000 | 0.782 | ||||||||

| 4 | VM1 | piece2 | 5 | |||||||||

| 0.800 | 0.812 | 0.600 | 0.936 | 0.934 | ||||||||

| 0.614 | 0.734 | 0.000 | 0.936 | 0.934 | ||||||||

| 0.614 | 0.734 | 0.000 | 0.936 | 0.934 | ||||||||

| 4 | VM1 | piece3 | 10.000 | |||||||||

| 0.647 | 0.752 | 0.846 | 1.000 | 0.579 | 0.314 | 0.500 | 0.742 | 1.000 | 1.000 | |||

| 0.00000 | 0.752 | 0.724 | 0.831 | 0.000 | 0.000 | 0.000 | 0.000 | 0.905 | 1.000 | |||

| 0.00000 | 0.752 | 0.724 | 0.831 | 0.000 | 0.000 | 0.000 | 0.000 | 0.905 | 1.000 | |||

| 4 | VM1 | piece4 | 8 | |||||||||

| 1.00000 | 0.857 | 0.600 | 1.000 | 1.000 | 1.000 | 0.900 | 1.000 | |||||

| 0.860 | 0.429 | 0.000 | 1.000 | 1.000 | 0.912 | 0.750 | 1.000 | |||||

| 0.860 | 0.429 | 0.000 | 1.000 | 1.000 | 0.912 | 0.750 | 1.000 | |||||

| 4 | VM1 | piece5 | 13.000 | |||||||||

| 0.857 | 0.714 | 0.989 | 0.993 | 0.968 | 0.400 | 0.125 | 0.529 | 0.500 | 0.771 | 0.800 | 0.668 | 0.573 |

| 0.857 | 0.000 | 0.989 | 0.993 | 0.968 | 0.000 | 0.000 | 0.000 | 0.000 | 0.366 | 0.746 | 0.000 | 0.000 |

| 0.857 | 0.000 | 0.989 | 0.993 | 0.968 | 0.000 | 0.000 | 0.000 | 0.000 | 0.366 | 0.746 | 0.000 | 0.000 |

| 5 | VM2 | piece1 | 5 | |||||||||

| 0.727 | 0.719 | 0.625 | 0.111 | 0.667 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | ||||||||

| 5 | VM2 | piece2 | 5 | |||||||||

| 0.538 | 0.812 | 0.600 | 0.920 | 0.934 | ||||||||

| 0.00000 | 0.734 | 0.000 | 0.920 | 0.934 | ||||||||

| 0.00000 | 0.734 | 0.000 | 0.920 | 0.934 | ||||||||

| 5 | VM2 | piece3 | 10.000 | |||||||||

| 0.786 | 0.744 | 0.962 | 0.278 | 0.395 | 0.360 | 0.250 | 0.677 | 0.571 | 0.190 | |||

| 0.384 | 0.000 | 0.498 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 0.384 | 0.000 | 0.498 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 5 | VM2 | piece4 | 8 | |||||||||

| 0.667 | 0.571 | 0.600 | 1.000 | 1.000 | 0.714 | 0.800 | 0.769 | |||||

| 0.00000 | 0.000 | 0.000 | 0.911 | 1.000 | 0.000 | 0.733 | 0.769 | |||||

| 0.00000 | 0.000 | 0.000 | 0.911 | 1.000 | 0.000 | 0.733 | 0.769 | |||||

| 5 | VM2 | piece5 | 13.000 | |||||||||

| 0.609 | 0.087 | 0.974 | 0.982 | 0.633 | 0.600 | 0.333 | 0.111 | 0.084 | 0.800 | 0.667 | 0.652 | 0.488 |

| 0.00000 | 0.000 | 0.974 | 0.982 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.388 | 0.000 | 0.000 | 0.000 |

| 0.00000 | 0.000 | 0.974 | 0.982 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.388 | 0.000 | 0.000 | 0.000 |

| 6 | IR1 | piece1 | 5 | |||||||||

| 0.765 | 0.684 | 0.714 | 0.750 | 0.600 | ||||||||

| 0.074 | 0.000 | 0.000 | 0.375 | 0.000 | ||||||||

| 0.074 | 0.000 | 0.000 | 0.375 | 0.000 | ||||||||

| 6 | IR1 | piece2 | 5 | |||||||||

| 0.727 | 0.812 | 0.600 | 0.024 | 0.154 | ||||||||

| 0.00000 | 0.109 | 0.000 | 0.000 | 0.000 | ||||||||

| 0.00000 | 0.109 | 0.000 | 0.000 | 0.000 | ||||||||

| 6 | IR1 | piece3 | 10.000 | |||||||||

| 0.647 | 0.062 | 0.742 | 0.833 | 0.237 | 0.474 | 0.250 | 0.839 | 0.857 | 0.750 | |||

| 0.00000 | 0.000 | 0.000 | 0.144 | 0.000 | 0.000 | 0.000 | 0.468 | 0.238 | 0.188 | |||

| 0.00000 | 0.000 | 0.000 | 0.144 | 0.000 | 0.000 | 0.000 | 0.468 | 0.238 | 0.188 | |||

| 6 | IR1 | piece4 | 8 | |||||||||

| 0.600 | 0.429 | 0.000 | 0.800 | 0.133 | 0.143 | 0.778 | 0.692 | |||||

| 0.00000 | 0.000 | 0.000 | 0.177 | 0.000 | 0.000 | 0.389 | 0.000 | |||||

| 0.00000 | 0.000 | 0.000 | 0.177 | 0.000 | 0.000 | 0.389 | 0.000 | |||||

| 6 | IR1 | piece5 | 13.000 | |||||||||

| 0.500 | 0.625 | 0.015 | 0.081 | 0.333 | 0.364 | 0.700 | 0.889 | 0.800 | 0.359 | 0.652 | 0.134 | 0.181 |

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.136 | 0.198 | 0.000 | 0.000 | 0.000 | 0.000 |

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.136 | 0.198 | 0.000 | 0.000 | 0.000 | 0.000 |

| 7 | PLM1 | piece1 | 5 | |||||||||

| 0.941 | 0.842 | 0.077 | 0.000 | 0.000 | ||||||||

| 0.309 | 0.265 | 0.000 | 0.000 | 0.000 | ||||||||

| 0.309 | 0.265 | 0.000 | 0.000 | 0.000 | ||||||||

| 7 | PLM1 | piece2 | 5 | |||||||||

| 0.333 | 0.552 | 0.125 | 0.116 | 0.053 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | ||||||||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | ||||||||

| 7 | PLM1 | piece3 | 10.000 | |||||||||

| 0.312 | 0.357 | 0.553 | 0.146 | 0.474 | 0.344 | 0.062 | 0.000 | 0.000 | 0.000 | |||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 0.00000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | |||

| 7 | PLM1 | piece4 | 8 | |||||||||

| 0.250 | 0.500 | 0.000 | 0.286 | 0.000 | 1.000 | 0.000 | 0.000 | |||||

| 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 | 0.000 | |||||

| 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 | 0.000 | |||||

| 7 | PLM1 | piece5 | 13 |||||||||

| 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 | 0.263 |

| 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 |

| 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 | 0.054 |

Table 5. Tabular version of Figures 14 and 15.

References

- Harold Barlow and Sam Morgenstern. (1948). A dictionary of musical themes. Crown Publishers, New York.

- Siglind Bruhn. (1993). J.S. Bach's Well-Tempered Clavier: in-depth analysis and interpretation. Mainer International, Hong Kong.

- Tom Collins, Sebastian Böck, Florian Krebs, and Gerhard Widmer. (2014). Bridging the audio-symbolic gap: the discovery of repeated note content directly from polyphonic music audio. In Proc. Audio Engineering Society's 53rd Conference on Semantic Audio, London, UK.

- William Drabkin. (2001). Motif. In S. Sadie and J. Tyrrell (Eds), The new Grove dictionary of music and musicians. Macmillan, London, UK, 2nd ed.

- Olivier Lartillot. (2014a). Submission to MIREX Discovery of Repeated Themes and Sections. 10th Annual Music Information Retrieval eXchange (MIREX'14), Taipei, Taiwan.

- Olivier Lartillot. (2014b). In-depth motivic analysis based on multiparametric closed pattern and cyclic sequence mining. In Proc. ISMIR, Taipei, Taiwan.

- Brian McFee, Oriol Nieto, and Juan Pablo Bello. (2015). Hierarchical evaluation of segment boundary detection. In Proc. ISMIR (pp. 406-412), Malaga, Spain.

- Oriol Nieto and Morwaread Farbood. (2014a). Submission to MIREX Discovery of Repeated Themes and Sections. 10th Annual Music Information Retrieval eXchange (MIREX'14), Taipei, Taiwan.

- Oriol Nieto and Morwaread Farbood. (2014b). Identifying polyphonic musical patterns from audio recordings using music segmentation techniques. In Proc. ISMIR, Taipei, Taiwan.

- Oriol Nieto and Morwaread Farbood. (2013). Discovering musical patterns using audio structural segmentation techniques. 9th Annual Music Information Retrieval eXchange (MIREX'13), Curitiba, Brazil.

- Matevz Pesek, Ales Leonardis, and Matija Marolt. (2015). Submission to MIREX Discovery of Repeated Themes and Sections. 11th Annual Music Information Retrieval eXchange (MIREX'15), Malaga, Spain.

- Matevz Pesek, Ales Leonardis, and Matija Marolt. (2014). A compositional hierarchical model for music information retrieval. In Proc. ISMIR (pp. 131-136), Taipei, Taiwan.

- Arnold Schoenberg. (1967). Fundamentals of Musical Composition. Faber and Faber, London.

- Gissel Velarde and David Meredith. (2014). Submission to MIREX Discovery of Repeated Themes and Sections. 10th Annual Music Information Retrieval eXchange (MIREX'14), Taipei, Taiwan.

- Cheng-i Wang, Jennifer Hsu, and Shlomo Dubnov. (2015a). Submission to MIREX Discovery of Repeated Themes and Sections. 11th Annual Music Information Retrieval eXchange (MIREX'15), Malaga, Spain.

- Cheng-i Wang, Jennifer Hsu, and Shlomo Dubnov. (2015b). Music pattern discovery with variable Markov oracle: a unified approach to symbolic and audio representations. In Proc. ISMIR (pp. 176-182), Malaga, Spain.

- Ron J. Weiss and Juan Pablo Bello. (2010). Identifying repeated patterns in music using sparse convolutive non-negative matrix factorization. In Proc. ISMIR (pp. 123-128), Utrecht, The Netherlands.