2011:Audio Cover Song Identification Results


Introduction

This year, we ran Audio Cover Song (ACS) Identification with two datasets: the Mixed Collection and Sapp's Mazurka Collection.

Mixed Collection Information

This is the "original" ACS collection. The Audio Cover Song database contains 1000 pieces, within which are embedded 30 different "cover songs", each represented by 11 different "versions", for a total of 330 audio files (16-bit, monophonic, 22.05 kHz, WAV). The "cover songs" represent a variety of genres (e.g., classical, jazz, gospel, rock, folk-rock), and the variations span a variety of styles and orchestrations.

Using each of these cover song files in turn as the "seed/query" file, we examined the returned list of items for the presence of the other 10 versions of that song.
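A minimal Python sketch of the per-query measures reported below, assuming each query's returned list excludes the query itself and that average precision is the standard non-interpolated form (precision at each relevant version's rank, averaged over all 10 relevant versions). The function name `query_metrics` and its arguments are hypothetical; this is not the MIREX evaluation code.

```python
def query_metrics(ranked_ids, relevant_ids, cutoff=10):
    """Return (covers in top `cutoff`, average precision, rank of first cover).

    ranked_ids: candidate file ids ordered best match first, query excluded.
    relevant_ids: ids of the other versions of the query's cover song.
    """
    relevant = set(relevant_ids)
    in_top = sum(1 for item in ranked_ids[:cutoff] if item in relevant)

    found = 0
    precision_sum = 0.0
    first_rank = None
    for rank, item in enumerate(ranked_ids, start=1):
        if item in relevant:
            found += 1
            precision_sum += found / rank  # precision at this relevant item's rank
            if first_rank is None:
                first_rank = rank
    # Non-interpolated AP: versions never returned contribute zero precision.
    avg_precision = precision_sum / len(relevant) if relevant else 0.0
    return in_top, avg_precision, first_rank


# Toy query with 3 relevant versions returned at ranks 1, 3 and 5.
print(query_metrics(["a", "x", "b", "y", "c"], ["a", "b", "c"]))
```

In the toy call, the average precision is (1/1 + 2/3 + 3/5) / 3, and the first correct cover is at rank 1.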

Sapp's Mazurka Collection Information

In addition to our original ACS dataset, we used the Mazurka.org dataset put together by Craig Sapp. We randomly chose 11 versions of each of 49 mazurkas and ran them as a separate ACS subtask. Systems returned a 539x539 distance matrix (49 x 11 = 539 files), from which we located the ranks of each of the associated cover versions.
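The step from a distance matrix to cover ranks can be sketched as follows, assuming smaller distances mean more similar, the query is dropped from its own list, and ties are broken by file index. `cover_ranks` is a hypothetical helper for illustration, not the evaluation script used here.

```python
def cover_ranks(dist, labels):
    """For each query row, return 1-based ranks of the files sharing its label.

    dist: square distance matrix as a list of lists (smaller = more similar).
    labels: labels[i] identifies the work (e.g. which mazurka) file i is a version of.
    """
    n = len(dist)
    result = []
    for q in range(n):
        # Assumption: the query itself is excluded from its own ranking.
        candidates = [j for j in range(n) if j != q]
        # Sort by distance; break ties by file index so the order is deterministic.
        candidates.sort(key=lambda j: (dist[q][j], j))
        ranks = [r for r, j in enumerate(candidates, start=1) if labels[j] == labels[q]]
        result.append(ranks)
    return result


# 49 mazurkas x 11 versions each gives the 539 x 539 matrix described above.
n_files = 49 * 11
print(n_files)  # 539

toy_dist = [
    [0.0, 1.0, 0.5, 6.0],
    [1.0, 0.0, 4.0, 7.0],
    [0.5, 4.0, 0.0, 2.0],
    [6.0, 7.0, 2.0, 0.0],
]
print(cover_ranks(toy_dist, [0, 0, 1, 1]))  # [[2], [1], [2], [1]]
```

On the full Mazurka matrix, each query row would yield 10 ranks, one per other version of the same mazurka.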

General Legend

| Sub code | Submission name | Abstract | Contributors |
|---|---|---|---|
| ALL1 | tonalspacecoversys.m | | Teppo E. Ahonen, Kjell Lemström, Simo Linkola |
| CWWJ1 | CWWJ | | Chuan-Yau Chan, Ju-Chiang Wang, Hsin-Min Wang, Shyh-Kang Jeng |

Results

Mixed Collection

Summary Results

| Measure | ALL1 | CWWJ1 |
|---|---|---|
| Total number of covers identified in top 10 | 442.00 | 625.00 |
| Mean number of covers identified in top 10 (average performance) | 1.34 | 1.89 |
| Mean (arithmetic) of Avg. Precisions | 0.13 | 0.21 |
| Mean rank of first correctly identified cover (lower is better) | 23.92 | 29.99 |
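The summary rows are simple aggregates over all queries. A sketch, assuming each query has already been reduced to a (covers in top 10, average precision, rank of first correct cover) tuple; `summarize` is a hypothetical name, not part of the MIREX tooling.

```python
def summarize(per_query):
    """Aggregate per-query (covers in top 10, AP, first-cover rank) tuples.

    Assumes every query has at least one cover somewhere in its ranked list,
    so the first-cover rank is always defined.
    """
    n = len(per_query)
    total_top10 = sum(hits for hits, _, _ in per_query)
    return {
        "total_top10": total_top10,
        "mean_top10": total_top10 / n,
        "mean_avg_precision": sum(ap for _, ap, _ in per_query) / n,
        "mean_first_rank": sum(rank for _, _, rank in per_query) / n,
    }


print(summarize([(3, 0.5, 1), (1, 0.25, 4)]))
# Mixed Collection check: 330 queries in total.
print(round(442 / 330, 2), round(625 / 330, 2))  # 1.34 1.89
```

With 330 queries in the Mixed Collection, ALL1's 442 covers in the top 10 give 442 / 330 ≈ 1.34 and CWWJ1's 625 give 625 / 330 ≈ 1.89, matching the average-performance row.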

Number of Correct Covers at Rank X Returned in Top Ten

| Rank | ALL1 | CWWJ1 |
|---|---|---|
| 1 | 85 | 98 |
| 2 | 60 | 83 |
| 3 | 44 | 77 |
| 4 | 47 | 75 |
| 5 | 40 | 58 |
| 6 | 43 | 56 |
| 7 | 31 | 52 |
| 8 | 34 | 45 |
| 9 | 32 | 42 |
| 10 | 26 | 39 |
| Total | 442 | 625 |
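Each row counts how many correct covers, pooled over all queries, landed at exactly that rank, so each column sums to the "Total number of covers identified in top 10" row. A minimal sketch, assuming per-query cover ranks are already available; `rank_histogram` is hypothetical.

```python
def rank_histogram(ranks_per_query, cutoff=10):
    """Count correct covers at each rank 1..cutoff, pooled over all queries."""
    counts = [0] * cutoff
    for ranks in ranks_per_query:
        for r in ranks:
            if 1 <= r <= cutoff:
                counts[r - 1] += 1
    return counts


# Two toy queries: covers at ranks 1, 3, 12 and at ranks 2, 3.
hist = rank_histogram([[1, 3, 12], [2, 3]])
print(hist)       # [1, 1, 2, 0, 0, 0, 0, 0, 0, 0]
print(sum(hist))  # 4 covers in the top 10 (rank 12 is outside the cutoff)

# The ALL1 column in the table above sums to its "Total" row.
all1 = [85, 60, 44, 47, 40, 43, 31, 34, 32, 26]
print(sum(all1))  # 442
```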

Average Performance per Query Group

| Group | ALL1 | CWWJ1 |
|---|---|---|
| 1 | 0.34 | 0.82 |
| 2 | 0.02 | 0.01 |
| 3 | 0.17 | 0.14 |
| 4 | 0.13 | 0.20 |
| 5 | 0.03 | 0.07 |
| 6 | 0.30 | 0.11 |
| 7 | 0.15 | 0.04 |
| 8 | 0.04 | 0.01 |
| 9 | 0.04 | 0.03 |
| 10 | 0.05 | 0.22 |
| 11 | 0.23 | 0.02 |
| 12 | 0.10 | 0.48 |
| 13 | 0.33 | 0.30 |
| 14 | 0.03 | 0.03 |
| 15 | 0.18 | 0.28 |
| 16 | 0.04 | 0.03 |
| 17 | 0.08 | 0.12 |
| 18 | 0.03 | 0.39 |
| 19 | 0.01 | 0.03 |
| 20 | 0.18 | 0.05 |
| 21 | 0.12 | 0.14 |
| 22 | 0.03 | 0.10 |
| 23 | 0.01 | 0.09 |
| 24 | 0.13 | 0.26 |
| 25 | 0.02 | 0.03 |
| 26 | 0.05 | 0.24 |
| 27 | 0.09 | 0.63 |
| 28 | 0.02 | 0.01 |
| 29 | 0.68 | 0.38 |
| 30 | 0.20 | 0.69 |

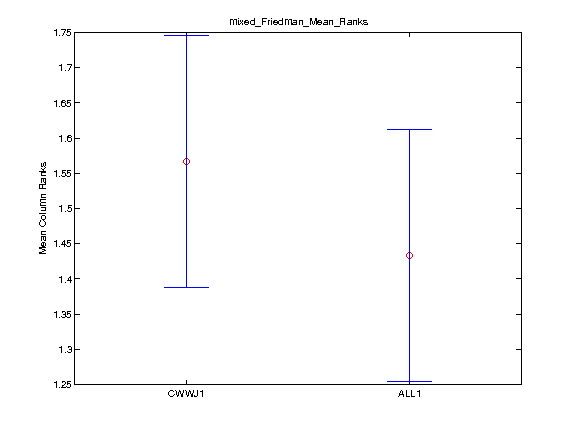

Friedman's Test for Significant Differences

The Friedman test was run in MATLAB against the Average Precision summary data over the 30 song groups.

Command: `[c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer', 'estimate', 'friedman', 'alpha', 0.05);`

| TeamID | TeamID | Lower bound | Mean | Upper bound | Significance |
|---|---|---|---|---|---|
| CWWJ1 | ALL1 | -0.22 | 0.13 | 0.49 | FALSE |
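The Mean column is the difference in mean Friedman ranks between the two systems, and a pair is flagged significant when its Tukey-Kramer interval excludes zero. Below is a stdlib Python sketch of both steps, assuming higher average precision receives the higher within-group rank and tied values share their average rank. It does not reproduce MATLAB's `friedman`/`multcompare` interval computation, and the helper names are hypothetical. (SciPy's `friedmanchisquare` requires at least three samples, so it does not cover this two-system comparison.)

```python
def within_block_ranks(row):
    """Rank one block (song group) ascending, averaging ranks over ties."""
    order = sorted(range(len(row)), key=lambda j: row[j])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        k = i
        # Extend k over a run of tied values.
        while k + 1 < len(order) and row[order[k + 1]] == row[order[i]]:
            k += 1
        avg_rank = (i + k) / 2 + 1  # 1-based average rank of positions i..k
        for m in range(i, k + 1):
            ranks[order[m]] = avg_rank
        i = k + 1
    return ranks


def mean_ranks(blocks):
    """blocks[g][s] = score of system s on group g; returns mean rank per system."""
    n_sys = len(blocks[0])
    totals = [0.0] * n_sys
    for row in blocks:
        for s, r in enumerate(within_block_ranks(row)):
            totals[s] += r
    return [t / len(blocks) for t in totals]


def is_significant(lower, upper):
    """A pairwise difference is significant when its interval excludes zero."""
    return not (lower <= 0.0 <= upper)


# Toy per-group average precisions for two systems (columns: system A, system B).
toy = [[0.10, 0.20], [0.30, 0.10], [0.50, 0.50], [0.20, 0.40]]
rank_a, rank_b = mean_ranks(toy)
print(rank_b - rank_a)  # 0.25, mean-rank difference B minus A
```

Applied to the interval above, `is_significant(-0.22, 0.49)` is False, whereas an interval such as 0.52 to 1.08 excludes zero and would be flagged significant.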

Run Times

TBA

Sapp's Mazurka Collection

Summary Results

| Measure | ALL1 | CWWJ1 |
|---|---|---|
| Total number of covers identified in top 10 | 3462.00 | 4869.00 |
| Mean number of covers identified in top 10 (average performance) | 6.42 | 9.03 |
| Mean (arithmetic) of Avg. Precisions | 0.70 | 0.88 |
| Mean rank of first correctly identified cover (lower is better) | 1.76 | 1.82 |

Number of Correct Covers at Rank X Returned in Top Ten

| Rank | ALL1 | CWWJ1 |
|---|---|---|
| 1 | 491 | 373 |
| 2 | 469 | 491 |
| 3 | 447 | 509 |
| 4 | 418 | 508 |
| 5 | 381 | 516 |
| 6 | 342 | 513 |
| 7 | 296 | 511 |
| 8 | 255 | 502 |
| 9 | 217 | 487 |
| 10 | 146 | 459 |
| Total | 3462 | 4869 |

Average Performance per Query Group

| Group | ALL1 | CWWJ1 |
|---|---|---|
| 1 | 0.11 | 0.95 |
| 2 | 0.90 | 1.00 |
| 3 | 0.80 | 0.88 |
| 4 | 0.97 | 0.91 |
| 5 | 0.67 | 1.00 |
| 6 | 0.46 | 1.00 |
| 7 | 0.47 | 1.00 |
| 8 | 0.84 | 1.00 |
| 9 | 0.99 | 1.00 |
| 10 | 0.92 | 0.80 |
| 11 | 0.85 | 1.00 |
| 12 | 0.87 | 0.89 |
| 13 | 0.89 | 0.93 |
| 14 | 0.24 | 0.80 |
| 15 | 0.90 | 1.00 |
| 16 | 0.71 | 0.77 |
| 17 | 0.82 | 1.00 |
| 18 | 0.83 | 0.91 |
| 19 | 0.62 | 0.96 |
| 20 | 0.92 | 1.00 |
| 21 | 0.21 | 0.91 |
| 22 | 0.98 | 1.00 |
| 23 | 0.63 | 1.00 |
| 24 | 0.88 | 0.92 |
| 25 | 0.14 | 0.79 |
| 26 | 0.67 | 0.71 |
| 27 | 0.48 | 0.89 |
| 28 | 0.15 | 0.30 |
| 29 | 0.08 | 0.05 |
| 30 | 0.61 | 0.96 |
| 31 | 0.73 | 1.00 |
| 32 | 0.95 | 0.65 |
| 33 | 0.92 | 1.00 |
| 34 | 0.35 | 1.00 |
| 35 | 1.00 | 1.00 |
| 36 | 0.85 | 0.89 |
| 37 | 0.57 | 0.95 |
| 38 | 0.13 | 1.00 |
| 39 | 0.71 | 0.84 |
| 40 | 0.73 | 1.00 |
| 41 | 0.74 | 0.95 |
| 42 | 0.69 | 0.94 |
| 43 | 0.80 | 0.97 |
| 44 | 0.72 | 1.00 |
| 45 | 0.74 | 0.74 |
| 46 | 0.91 | 0.99 |
| 47 | 0.68 | 0.77 |
| 48 | 0.85 | 0.86 |
| 49 | 0.73 | 0.82 |

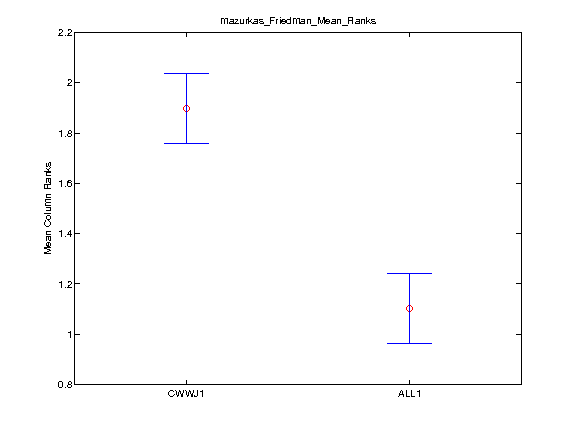

Friedman's Test for Significant Differences

The Friedman test was run in MATLAB against the Average Precision summary data over the 49 mazurka groups.

Command: `[c,m,h,gnames] = multcompare(stats, 'ctype', 'tukey-kramer', 'estimate', 'friedman', 'alpha', 0.05);`

| TeamID | TeamID | Lower bound | Mean | Upper bound | Significance |
|---|---|---|---|---|---|
| CWWJ1 | ALL1 | 0.52 | 0.80 | 1.08 | TRUE |

Run Times

TBA

Individual Results Files

Mixed Collection

Average Precision by Query

ALL1 : Teppo E. Ahonen, Kjell Lemström, Simo Linkola

CWWJ1 : Chuan-Yau Chan, Ju-Chiang Wang, Hsin-Min Wang, Shyh-Kang Jeng

Rank Lists

ALL1 : Teppo E. Ahonen, Kjell Lemström, Simo Linkola

CWWJ1 : Chuan-Yau Chan, Ju-Chiang Wang, Hsin-Min Wang, Shyh-Kang Jeng

Sapp's Mazurka Collection

Average Precision by Query

ALL1 : Teppo E. Ahonen, Kjell Lemström, Simo Linkola

CWWJ1 : Chuan-Yau Chan, Ju-Chiang Wang, Hsin-Min Wang, Shyh-Kang Jeng

Rank Lists

ALL1 : Teppo E. Ahonen, Kjell Lemström, Simo Linkola

CWWJ1 : Chuan-Yau Chan, Ju-Chiang Wang, Hsin-Min Wang, Shyh-Kang Jeng