2010:Multiple Fundamental Frequency Estimation & Tracking Results

Introduction

These are the results for the 2010 running of the Multiple Fundamental Frequency Estimation and Tracking task. For background information about this task, please refer to the 2010:Multiple Fundamental Frequency Estimation & Tracking page.

General Legend

Task 1: Multiple Fundamental Frequency Estimation (MF0E)

MF0E Overall Summary Results

Below are the average scores across 40 test files. These files come from 3 different sources: a woodwind quintet recording of bassoon, clarinet, horn, flute and oboe (UIUC); rendered MIDI using the RWC database, donated by IRCAM; and a quartet recording of bassoon, clarinet, violin and sax donated by Dr. Bryan Pardo's Interactive Audio Lab (IAL). 20 files come from 5 sections of the woodwind recording, where each section has 4 files ranging from polyphony 2 to polyphony 5. 12 files come from IAL, from 4 different songs ranging from polyphony 2 to polyphony 4, and 8 files come from RWC synthesized MIDI, from 2 different songs ranging from polyphony 2 to polyphony 5.

| Metric | AR1 | AR2 | AR3 | AR4 | BD1 | CRVRC1 | CRVRC3 | DCL1 | DHP1 | JW1 | JW2 | LYLC1 | NNTOS1 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Accuracy | 0.654 | 0.655 | 0.692 | 0.692 | 0.468 | 0.49 | 0.457 | 0.457 | 0.553 | 0.354 | 0.361 | 0.373 | 0.06 |

| Accuracy Chroma | 0.68 | 0.681 | 0.71 | 0.71 | 0.545 | 0.544 | 0.52 | 0.524 | 0.594 | 0.459 | 0.473 | 0.457 | 0.109 |

Detailed Results

| TeamID | Precision | Recall | Accuracy | Etot | Esubs | Emiss | Efa |

|---|---|---|---|---|---|---|---|

| AR1 | 0.721 | 0.799 | 0.654 | 0.448 | 0.096 | 0.105 | 0.247 |

| AR2 | 0.722 | 0.799 | 0.655 | 0.446 | 0.095 | 0.106 | 0.245 |

| AR3 | 0.752 | 0.829 | 0.692 | 0.391 | 0.084 | 0.088 | 0.219 |

| AR4 | 0.753 | 0.828 | 0.692 | 0.390 | 0.084 | 0.088 | 0.218 |

| BD1 | 0.716 | 0.485 | 0.468 | 0.558 | 0.167 | 0.349 | 0.043 |

| CRVRC1 | 0.638 | 0.556 | 0.490 | 0.598 | 0.198 | 0.246 | 0.154 |

| CRVRC3 | 0.515 | 0.637 | 0.457 | 0.837 | 0.227 | 0.136 | 0.474 |

| DCL1 | 0.499 | 0.676 | 0.457 | 0.847 | 0.206 | 0.119 | 0.523 |

| DHP1 | 0.712 | 0.632 | 0.553 | 0.518 | 0.129 | 0.239 | 0.150 |

| JW1 | 0.369 | 0.579 | 0.354 | 1.146 | 0.361 | 0.060 | 0.725 |

| JW2 | 0.384 | 0.541 | 0.361 | 1.033 | 0.382 | 0.077 | 0.575 |

| LYLC1 | 0.584 | 0.399 | 0.373 | 0.681 | 0.228 | 0.373 | 0.080 |

| NNTOS1 | 0.142 | 0.061 | 0.060 | 0.967 | 0.277 | 0.661 | 0.028 |
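This page does not define the column headings. Assuming they follow the frame-level definitions commonly used for multiple-F0 evaluation (precision, recall and accuracy from true/false positive counts, plus a total error split into substitution, miss and false-alarm parts), a minimal Python sketch is given below. The function name `frame_metrics`, the per-frame count tuples and the toy input are illustrative assumptions, not the evaluation code used for these results.

```python
def frame_metrics(frames):
    """Compute frame-level scores from per-frame counts.

    frames: list of (n_ref, n_sys, n_corr) tuples, one per analysis frame:
      n_ref  = number of reference F0s in the frame
      n_sys  = number of F0s returned by the system
      n_corr = number of returned F0s judged correct
    Assumes at least one reference F0 and one returned F0 overall.
    """
    tp = sum(c for _, _, c in frames)
    fp = sum(s - c for _, s, c in frames)
    fn = sum(r - c for r, _, c in frames)
    total_ref = sum(r for r, _, _ in frames)

    # Error scores are normalised by the total number of reference F0s.
    esubs = sum(min(r, s) - c for r, s, c in frames) / total_ref
    emiss = sum(max(0, r - s) for r, s, _ in frames) / total_ref
    efa = sum(max(0, s - r) for r, s, _ in frames) / total_ref
    etot = sum(max(r, s) - c for r, s, c in frames) / total_ref

    return {
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "accuracy": tp / (tp + fp + fn),
        "etot": etot,
        "esubs": esubs,
        "emiss": emiss,
        "efa": efa,
    }


# Toy input (hypothetical counts, not taken from the test files).
toy = [(3, 3, 2), (2, 4, 2), (4, 2, 1)]
m = frame_metrics(toy)
```

Under these definitions Etot equals Esubs + Emiss + Efa, which agrees with the rows above up to rounding (for AR1, 0.096 + 0.105 + 0.247 = 0.448). Etot can exceed 1 when a system returns many false alarms, as in the JW1 and JW2 rows.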

Detailed Chroma Results

Here, accuracy is assessed on chroma results (i.e., all F0s are mapped to a single octave before evaluation).

| TeamID | Precision | Recall | Accuracy | Etot | Esubs | Emiss | Efa |

|---|---|---|---|---|---|---|---|

| AR1 | 0.750 | 0.833 | 0.680 | 0.415 | 0.063 | 0.105 | 0.247 |

| AR2 | 0.752 | 0.832 | 0.681 | 0.413 | 0.063 | 0.106 | 0.245 |

| AR3 | 0.772 | 0.851 | 0.710 | 0.368 | 0.061 | 0.088 | 0.219 |

| AR4 | 0.772 | 0.851 | 0.710 | 0.367 | 0.061 | 0.088 | 0.218 |

| BD1 | 0.830 | 0.567 | 0.545 | 0.476 | 0.085 | 0.349 | 0.043 |

| CRVRC1 | 0.707 | 0.621 | 0.544 | 0.533 | 0.134 | 0.246 | 0.154 |

| CRVRC3 | 0.587 | 0.732 | 0.520 | 0.742 | 0.132 | 0.136 | 0.474 |

| DCL1 | 0.574 | 0.775 | 0.524 | 0.748 | 0.107 | 0.119 | 0.523 |

| DHP1 | 0.766 | 0.678 | 0.594 | 0.471 | 0.082 | 0.239 | 0.150 |

| JW1 | 0.477 | 0.767 | 0.459 | 0.958 | 0.172 | 0.060 | 0.725 |

| JW2 | 0.501 | 0.726 | 0.473 | 0.849 | 0.197 | 0.077 | 0.575 |

| LYLC1 | 0.715 | 0.490 | 0.457 | 0.590 | 0.137 | 0.373 | 0.080 |

| NNTOS1 | 0.303 | 0.112 | 0.109 | 0.916 | 0.227 | 0.661 | 0.028 |
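The octave folding behind the chroma results can be sketched as follows. This is a minimal illustration, assuming F0s are given in Hz, that folding is done on a semitone scale relative to a 440 Hz reference, and that two folded values match when they lie within a quarter tone of each other on the pitch-class circle. The helper names `fold_to_octave` and `chroma_match` are hypothetical and are not part of the evaluation software.

```python
import math


def fold_to_octave(f0_hz, ref_hz=440.0):
    """Map an F0 in Hz to a position in [0, 12) semitones within one octave."""
    return (12.0 * math.log2(f0_hz / ref_hz)) % 12.0


def chroma_match(f_ref_hz, f_est_hz, tol_semitones=0.5):
    """True if the two F0s agree after folding, ignoring octave errors.

    The distance is measured on a circle so that values just below 12
    and just above 0 semitones are treated as close.
    """
    d = abs(fold_to_octave(f_ref_hz) - fold_to_octave(f_est_hz))
    d = min(d, 12.0 - d)
    return d <= tol_semitones


# An octave error counts as correct in chroma terms...
octave_error_ok = chroma_match(220.0, 440.0)
# ...while a one-semitone error does not.
semitone_error_ok = chroma_match(440.0, 440.0 * 2 ** (1 / 12))
```

This is why the chroma scores above are never lower than the corresponding non-chroma scores for Task 1: octave errors that count as substitutions in the plain evaluation become correct detections after folding.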

Individual Results Files for Task 1

AR1 = Chunghsin Yeh, Axel Roebel

AR2 = Chunghsin Yeh, Axel Roebel

AR3 = Chunghsin Yeh, Axel Roebel

AR4 = Chunghsin Yeh, Axel Roebel

BD1 = Emmanouil Benetos, Simon Dixon

CRVRC1 = F. Quesada et al.

CRVRC3 = F. Quesada et al.

DCL1 = Arnaud Dessein, Arshia Cont, Guillaume Lemaitre

DHP1 = Zhiyao Duan, Jinyu Han, Bryan Pardo

JW1 = Jun Wu et al.

JW2 = Jun Wu et al.

LYLC1 = C. T. Lee et al.

NNTOS1 = M. Nakano et al.

Info about the filenames

The filenames starting with part* come from the acoustic woodwind recording; the ones starting with RWC are synthesized. The legend for the instruments is:

bs = bassoon, cl = clarinet, fl = flute, hn = horn, ob = oboe, vl = violin, cel = cello, gtr = guitar, sax = saxophone, bass = electric bass guitar

Run Times

TBA

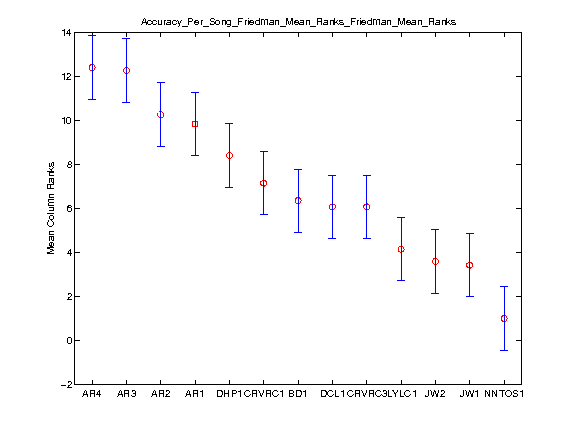

Friedman tests for Multiple Fundamental Frequency Estimation (MF0E)

The Friedman test was run in MATLAB to test for significant differences amongst systems with regard to their performance (accuracy) on individual files.
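The ranking step underlying the Friedman test can be sketched in plain Python. The results on this page were produced in MATLAB; the code below is only an illustration under stated assumptions: systems are ranked within each file (rank 1 for the lowest score, ties sharing the average rank), the statistic is computed without tie correction, and the toy scores are invented for the example. The function names are hypothetical. The "Mean" column in the multi-comparison table appears to be a difference in mean ranks of this kind, but that reading is an assumption.

```python
def average_ranks(scores):
    """Rank one file's scores in ascending order; ties share the average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over any run of tied scores.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def friedman(per_file_scores):
    """Return (mean rank per system, Friedman chi-square without tie correction).

    per_file_scores: list of rows, one row per file, one column per system.
    """
    n = len(per_file_scores)
    k = len(per_file_scores[0])
    rank_sums = [0.0] * k
    for row in per_file_scores:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    mean_ranks = [s / n for s in rank_sums]
    chi2 = 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)
    return mean_ranks, chi2


# Toy accuracies for 3 hypothetical systems on 4 hypothetical files.
toy_scores = [
    [0.70, 0.50, 0.10],
    [0.65, 0.55, 0.20],
    [0.60, 0.62, 0.05],
    [0.72, 0.48, 0.15],
]
mean_ranks, chi2 = friedman(toy_scores)
```

A pair of systems is then reported as significantly different when the confidence interval around the difference of their mean ranks excludes zero, which matches the pattern in the table below, where every TRUE row has a positive lower bound.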

Tukey-Kramer HSD Multi-Comparison

| TeamID | TeamID | Lowerbound | Mean | Upperbound | Significance |

|---|---|---|---|---|---|

| AR4 | AR3 | -2.7597 | 0.1250 | 3.0097 | FALSE |

| AR4 | AR2 | -0.7472 | 2.1375 | 5.0222 | FALSE |

| AR4 | AR1 | -0.3222 | 2.5625 | 5.4472 | FALSE |

| AR4 | DHP1 | 1.1153 | 4.0000 | 6.8847 | TRUE |

| AR4 | CRVRC1 | 2.3653 | 5.2500 | 8.1347 | TRUE |

| AR4 | BD1 | 3.1653 | 6.0500 | 8.9347 | TRUE |

| AR4 | DCL1 | 3.4403 | 6.3250 | 9.2097 | TRUE |

| AR4 | CRVRC3 | 3.4403 | 6.3250 | 9.2097 | TRUE |

| AR4 | LYLC1 | 5.3653 | 8.2500 | 11.1347 | TRUE |

| AR4 | JW2 | 5.9153 | 8.8000 | 11.6847 | TRUE |

| AR4 | JW1 | 6.0903 | 8.9750 | 11.8597 | TRUE |

| AR4 | NNTOS1 | 8.5153 | 11.4000 | 14.2847 | TRUE |

| AR3 | AR2 | -0.8722 | 2.0125 | 4.8972 | FALSE |

| AR3 | AR1 | -0.4472 | 2.4375 | 5.3222 | FALSE |

| AR3 | DHP1 | 0.9903 | 3.8750 | 6.7597 | TRUE |

| AR3 | CRVRC1 | 2.2403 | 5.1250 | 8.0097 | TRUE |

| AR3 | BD1 | 3.0403 | 5.9250 | 8.8097 | TRUE |

| AR3 | DCL1 | 3.3153 | 6.2000 | 9.0847 | TRUE |

| AR3 | CRVRC3 | 3.3153 | 6.2000 | 9.0847 | TRUE |

| AR3 | LYLC1 | 5.2403 | 8.1250 | 11.0097 | TRUE |

| AR3 | JW2 | 5.7903 | 8.6750 | 11.5597 | TRUE |

| AR3 | JW1 | 5.9653 | 8.8500 | 11.7347 | TRUE |

| AR3 | NNTOS1 | 8.3903 | 11.2750 | 14.1597 | TRUE |

| AR2 | AR1 | -2.4597 | 0.4250 | 3.3097 | FALSE |

| AR2 | DHP1 | -1.0222 | 1.8625 | 4.7472 | FALSE |

| AR2 | CRVRC1 | 0.2278 | 3.1125 | 5.9972 | TRUE |

| AR2 | BD1 | 1.0278 | 3.9125 | 6.7972 | TRUE |

| AR2 | DCL1 | 1.3028 | 4.1875 | 7.0722 | TRUE |

| AR2 | CRVRC3 | 1.3028 | 4.1875 | 7.0722 | TRUE |

| AR2 | LYLC1 | 3.2278 | 6.1125 | 8.9972 | TRUE |

| AR2 | JW2 | 3.7778 | 6.6625 | 9.5472 | TRUE |

| AR2 | JW1 | 3.9528 | 6.8375 | 9.7222 | TRUE |

| AR2 | NNTOS1 | 6.3778 | 9.2625 | 12.1472 | TRUE |

| AR1 | DHP1 | -1.4472 | 1.4375 | 4.3222 | FALSE |

| AR1 | CRVRC1 | -0.1972 | 2.6875 | 5.5722 | FALSE |

| AR1 | BD1 | 0.6028 | 3.4875 | 6.3722 | TRUE |

| AR1 | DCL1 | 0.8778 | 3.7625 | 6.6472 | TRUE |

| AR1 | CRVRC3 | 0.8778 | 3.7625 | 6.6472 | TRUE |

| AR1 | LYLC1 | 2.8028 | 5.6875 | 8.5722 | TRUE |

| AR1 | JW2 | 3.3528 | 6.2375 | 9.1222 | TRUE |

| AR1 | JW1 | 3.5278 | 6.4125 | 9.2972 | TRUE |

| AR1 | NNTOS1 | 5.9528 | 8.8375 | 11.7222 | TRUE |

| DHP1 | CRVRC1 | -1.6347 | 1.2500 | 4.1347 | FALSE |

| DHP1 | BD1 | -0.8347 | 2.0500 | 4.9347 | FALSE |

| DHP1 | DCL1 | -0.5597 | 2.3250 | 5.2097 | FALSE |

| DHP1 | CRVRC3 | -0.5597 | 2.3250 | 5.2097 | FALSE |

| DHP1 | LYLC1 | 1.3653 | 4.2500 | 7.1347 | TRUE |

| DHP1 | JW2 | 1.9153 | 4.8000 | 7.6847 | TRUE |

| DHP1 | JW1 | 2.0903 | 4.9750 | 7.8597 | TRUE |

| DHP1 | NNTOS1 | 4.5153 | 7.4000 | 10.2847 | TRUE |

| CRVRC1 | BD1 | -2.0847 | 0.8000 | 3.6847 | FALSE |

| CRVRC1 | DCL1 | -1.8097 | 1.0750 | 3.9597 | FALSE |

| CRVRC1 | CRVRC3 | -1.8097 | 1.0750 | 3.9597 | FALSE |

| CRVRC1 | LYLC1 | 0.1153 | 3.0000 | 5.8847 | TRUE |

| CRVRC1 | JW2 | 0.6653 | 3.5500 | 6.4347 | TRUE |

| CRVRC1 | JW1 | 0.8403 | 3.7250 | 6.6097 | TRUE |

| CRVRC1 | NNTOS1 | 3.2653 | 6.1500 | 9.0347 | TRUE |

| BD1 | DCL1 | -2.6097 | 0.2750 | 3.1597 | FALSE |

| BD1 | CRVRC3 | -2.6097 | 0.2750 | 3.1597 | FALSE |

| BD1 | LYLC1 | -0.6847 | 2.2000 | 5.0847 | FALSE |

| BD1 | JW2 | -0.1347 | 2.7500 | 5.6347 | FALSE |

| BD1 | JW1 | 0.0403 | 2.9250 | 5.8097 | TRUE |

| BD1 | NNTOS1 | 2.4653 | 5.3500 | 8.2347 | TRUE |

| DCL1 | CRVRC3 | -2.8847 | 0.0000 | 2.8847 | FALSE |

| DCL1 | LYLC1 | -0.9597 | 1.9250 | 4.8097 | FALSE |

| DCL1 | JW2 | -0.4097 | 2.4750 | 5.3597 | FALSE |

| DCL1 | JW1 | -0.2347 | 2.6500 | 5.5347 | FALSE |

| DCL1 | NNTOS1 | 2.1903 | 5.0750 | 7.9597 | TRUE |

| CRVRC3 | LYLC1 | -0.9597 | 1.9250 | 4.8097 | FALSE |

| CRVRC3 | JW2 | -0.4097 | 2.4750 | 5.3597 | FALSE |

| CRVRC3 | JW1 | -0.2347 | 2.6500 | 5.5347 | FALSE |

| CRVRC3 | NNTOS1 | 2.1903 | 5.0750 | 7.9597 | TRUE |

| LYLC1 | JW2 | -2.3347 | 0.5500 | 3.4347 | FALSE |

| LYLC1 | JW1 | -2.1597 | 0.7250 | 3.6097 | FALSE |

| LYLC1 | NNTOS1 | 0.2653 | 3.1500 | 6.0347 | TRUE |

| JW2 | JW1 | -2.7097 | 0.1750 | 3.0597 | FALSE |

| JW2 | NNTOS1 | -0.2847 | 2.6000 | 5.4847 | FALSE |

| JW1 | NNTOS1 | -0.4597 | 2.4250 | 5.3097 | FALSE |

Task 2: Note Tracking (NT)

NT Mixed Set Overall Summary Results

This subtask is evaluated in two different ways. In the first setup, a returned note is assumed correct if its onset is within ±50 ms of a reference note and its F0 is within ± a quarter tone of the corresponding reference note, ignoring the returned offset values. In the second setup, on top of the above requirements, a correct returned note is required to have an offset value within 20% of the reference note's duration around the reference note's offset, or within 50 ms, whichever is larger.

A total of 34 files were used in this subtask: 16 from woodwind recording, 8 from IAL quintet recording and 6 piano.
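The two correctness criteria described above can be sketched as a single predicate. This is a minimal illustration, assuming notes are given as (onset in seconds, offset in seconds, F0 in Hz), that a quarter tone is 50 cents, and that the 20% offset tolerance is applied on each side of the reference offset. The function name `note_is_correct` and the example notes are hypothetical.

```python
import math

ONSET_TOL_S = 0.050      # onset tolerance: 50 ms
PITCH_TOL_CENTS = 50.0   # quarter tone
OFFSET_MIN_TOL_S = 0.050 # minimum offset tolerance: 50 ms
OFFSET_REL_TOL = 0.20    # 20% of the reference note duration


def note_is_correct(ref, est, use_offset=False):
    """Check one returned note against one reference note.

    ref, est: (onset_s, offset_s, f0_hz) tuples.
    use_offset=False gives the onset-only setup; True adds the offset test.
    """
    ref_on, ref_off, ref_f0 = ref
    est_on, est_off, est_f0 = est

    if abs(est_on - ref_on) > ONSET_TOL_S:
        return False

    cents = 1200.0 * abs(math.log2(est_f0 / ref_f0))
    if cents > PITCH_TOL_CENTS:
        return False

    if use_offset:
        # Tolerance is the larger of 20% of the reference duration and 50 ms.
        tol = max(OFFSET_REL_TOL * (ref_off - ref_on), OFFSET_MIN_TOL_S)
        if abs(est_off - ref_off) > tol:
            return False

    return True


ref_note = (1.00, 2.00, 440.0)
good_note = (1.03, 2.15, 445.0)  # passes both setups
late_end = (1.03, 2.30, 445.0)   # passes onset-only, fails onset-offset
```

Because the second setup only adds a condition, a note that is correct under onset-offset matching is also correct under onset-only matching, which is consistent with the onset-only F-measures below being higher for every system.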

| Metric | AR5 | AR6 | CRVRC2 | CRVRC4 | DCL2 | DHP2 | JW3 | JW4 | LYLC2 |

|---|---|---|---|---|---|---|---|---|---|

| Ave. F-Measure Onset-Offset | 0.3239 | 0.3265 | 0.1577 | 0.0975 | 0.2404 | 0.1934 | 0.0366 | 0.0377 | 0.1179 |

| Ave. F-Measure Onset Only | 0.5297 | 0.5314 | 0.3299 | 0.2091 | 0.4031 | 0.4099 | 0.2765 | 0.2737 | 0.2889 |

| Ave. F-Measure Chroma | 0.3327 | 0.3357 | 0.1748 | 0.1231 | 0.2851 | 0.2120 | 0.0570 | 0.0575 | 0.1387 |

| Ave. F-Measure Onset Only Chroma | 0.5391 | 0.5413 | 0.3623 | 0.2616 | 0.4702 | 0.4626 | 0.3629 | 0.3469 | 0.3495 |

Detailed Results

| TeamID | Precision | Recall | Ave. F-measure | Ave. Overlap |

|---|---|---|---|---|

| AR5 | 0.259 | 0.443 | 0.324 | 0.885 |

| AR6 | 0.261 | 0.447 | 0.327 | 0.884 |

| CRVRC2 | 0.197 | 0.136 | 0.158 | 0.848 |

| CRVRC4 | 0.111 | 0.089 | 0.097 | 0.809 |

| DCL2 | 0.178 | 0.402 | 0.240 | 0.882 |

| DHP2 | 0.154 | 0.270 | 0.193 | 0.842 |

| JW3 | 0.037 | 0.038 | 0.037 | 0.855 |

| JW4 | 0.040 | 0.037 | 0.038 | 0.849 |

| LYLC2 | 0.122 | 0.119 | 0.118 | 0.788 |

Detailed Chroma Results

Here, accuracy is assessed on chroma results (i.e., all F0s are mapped to a single octave before evaluation).

| TeamID | Precision | Recall | Ave. F-measure | Ave. Overlap |

|---|---|---|---|---|

| AR5 | 0.266 | 0.456 | 0.333 | 0.881 |

| AR6 | 0.268 | 0.460 | 0.336 | 0.879 |

| CRVRC2 | 0.218 | 0.151 | 0.175 | 0.848 |

| CRVRC4 | 0.140 | 0.112 | 0.123 | 0.804 |

| DCL2 | 0.210 | 0.479 | 0.285 | 0.875 |

| DHP2 | 0.170 | 0.295 | 0.212 | 0.837 |

| JW3 | 0.056 | 0.060 | 0.057 | 0.854 |

| JW4 | 0.059 | 0.058 | 0.058 | 0.846 |

| LYLC2 | 0.142 | 0.140 | 0.139 | 0.788 |

Results Based on Onset Only

| TeamID | Precision | Recall | Ave. F-measure | Ave. Overlap |

|---|---|---|---|---|

| AR5 | 0.425 | 0.723 | 0.530 | 0.727 |

| AR6 | 0.427 | 0.724 | 0.531 | 0.727 |

| CRVRC2 | 0.409 | 0.285 | 0.330 | 0.663 |

| CRVRC4 | 0.240 | 0.190 | 0.209 | 0.625 |

| DCL2 | 0.305 | 0.643 | 0.403 | 0.728 |

| DHP2 | 0.346 | 0.539 | 0.410 | 0.616 |

| JW3 | 0.293 | 0.274 | 0.276 | 0.481 |

| JW4 | 0.308 | 0.257 | 0.274 | 0.483 |

| LYLC2 | 0.306 | 0.282 | 0.289 | 0.555 |

Chroma Results Based on Onset Only

| TeamID | Precision | Recall | Ave. F-measure | Ave. Overlap |

|---|---|---|---|---|

| AR5 | 0.432 | 0.738 | 0.539 | 0.693 |

| AR6 | 0.435 | 0.739 | 0.541 | 0.692 |

| CRVRC2 | 0.451 | 0.313 | 0.362 | 0.649 |

| CRVRC4 | 0.299 | 0.238 | 0.262 | 0.607 |

| DCL2 | 0.356 | 0.750 | 0.470 | 0.690 |

| DHP2 | 0.389 | 0.612 | 0.463 | 0.582 |

| JW3 | 0.379 | 0.364 | 0.363 | 0.473 |

| JW4 | 0.386 | 0.329 | 0.347 | 0.478 |

| LYLC2 | 0.368 | 0.344 | 0.350 | 0.528 |

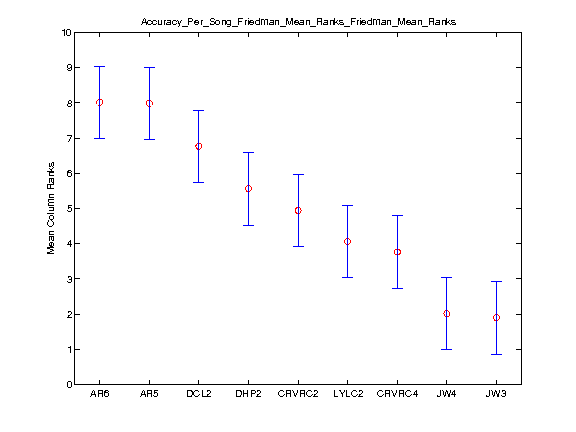

Friedman Tests for Note Tracking

The Friedman test was run in MATLAB to test for significant differences amongst systems with regard to the F-measure on individual files.

Tukey-Kramer HSD Multi-Comparison for Task 2

| TeamID | TeamID | Lowerbound | Mean | Upperbound | Significance |

|---|---|---|---|---|---|

| AR6 | AR5 | -2.0303 | 0.0294 | 2.0891 | FALSE |

| AR6 | DCL2 | -0.8097 | 1.2500 | 3.3097 | FALSE |

| AR6 | DHP2 | 0.3962 | 2.4559 | 4.5156 | TRUE |

| AR6 | CRVRC2 | 1.0138 | 3.0735 | 5.1332 | TRUE |

| AR6 | LYLC2 | 1.8962 | 3.9559 | 6.0156 | TRUE |

| AR6 | CRVRC4 | 2.1903 | 4.2500 | 6.3097 | TRUE |

| AR6 | JW4 | 3.9403 | 6.0000 | 8.0597 | TRUE |

| AR6 | JW3 | 4.0579 | 6.1176 | 8.1773 | TRUE |

| AR5 | DCL2 | -0.8391 | 1.2206 | 3.2803 | FALSE |

| AR5 | DHP2 | 0.3668 | 2.4265 | 4.4862 | TRUE |

| AR5 | CRVRC2 | 0.9844 | 3.0441 | 5.1038 | TRUE |

| AR5 | LYLC2 | 1.8668 | 3.9265 | 5.9862 | TRUE |

| AR5 | CRVRC4 | 2.1609 | 4.2206 | 6.2803 | TRUE |

| AR5 | JW4 | 3.9109 | 5.9706 | 8.0303 | TRUE |

| AR5 | JW3 | 4.0285 | 6.0882 | 8.1479 | TRUE |

| DCL2 | DHP2 | -0.8538 | 1.2059 | 3.2656 | FALSE |

| DCL2 | CRVRC2 | -0.2362 | 1.8235 | 3.8832 | FALSE |

| DCL2 | LYLC2 | 0.6462 | 2.7059 | 4.7656 | TRUE |

| DCL2 | CRVRC4 | 0.9403 | 3.0000 | 5.0597 | TRUE |

| DCL2 | JW4 | 2.6903 | 4.7500 | 6.8097 | TRUE |

| DCL2 | JW3 | 2.8079 | 4.8676 | 6.9273 | TRUE |

| DHP2 | CRVRC2 | -1.4421 | 0.6176 | 2.6773 | FALSE |

| DHP2 | LYLC2 | -0.5597 | 1.5000 | 3.5597 | FALSE |

| DHP2 | CRVRC4 | -0.2656 | 1.7941 | 3.8538 | FALSE |

| DHP2 | JW4 | 1.4844 | 3.5441 | 5.6038 | TRUE |

| DHP2 | JW3 | 1.6021 | 3.6618 | 5.7215 | TRUE |

| CRVRC2 | LYLC2 | -1.1773 | 0.8824 | 2.9421 | FALSE |

| CRVRC2 | CRVRC4 | -0.8832 | 1.1765 | 3.2362 | FALSE |

| CRVRC2 | JW4 | 0.8668 | 2.9265 | 4.9862 | TRUE |

| CRVRC2 | JW3 | 0.9844 | 3.0441 | 5.1038 | TRUE |

| LYLC2 | CRVRC4 | -1.7656 | 0.2941 | 2.3538 | FALSE |

| LYLC2 | JW4 | -0.0156 | 2.0441 | 4.1038 | FALSE |

| LYLC2 | JW3 | 0.1021 | 2.1618 | 4.2215 | TRUE |

| CRVRC4 | JW4 | -0.3097 | 1.7500 | 3.8097 | FALSE |

| CRVRC4 | JW3 | -0.1921 | 1.8676 | 3.9273 | FALSE |

| JW4 | JW3 | -1.9421 | 0.1176 | 2.1773 | FALSE |

NT Piano-Only Overall Summary Results

This subtask is evaluated in two different ways. In the first setup, a returned note is assumed correct if its onset is within ±50 ms of a reference note and its F0 is within ± a quarter tone of the corresponding reference note, ignoring the returned offset values. In the second setup, on top of the above requirements, a correct returned note is required to have an offset value within 20% of the reference note's duration around the reference note's offset, or within 50 ms, whichever is larger. The 6 piano recordings are evaluated separately for this subtask.

| Metric | AR5 | AR6 | CRVRC2 | CRVRC4 | DCL2 | DHP2 | JW3 | JW4 | LYLC2 |

|---|---|---|---|---|---|---|---|---|---|

| Ave. F-Measure Onset-Offset | 0.2241 | 0.2289 | 0.2039 | 0.0912 | 0.1707 | 0.1176 | 0.0274 | 0.0220 | 0.1975 |

| Ave. F-Measure Onset Only | 0.5977 | 0.5969 | 0.4522 | 0.2419 | 0.5318 | 0.3414 | 0.3864 | 0.3809 | 0.5168 |

| Ave. F-Measure Chroma | 0.2049 | 0.2098 | 0.2164 | 0.1078 | 0.2225 | 0.1254 | 0.0299 | 0.0242 | 0.2069 |

| Ave. F-Measure Onset Only Chroma | 0.5486 | 0.5478 | 0.4758 | 0.2908 | 0.5526 | 0.3606 | 0.4042 | 0.4042 | 0.5265 |

Detailed Results

| TeamID | Precision | Recall | Ave. F-measure | Ave. Overlap |

|---|---|---|---|---|

| AR5 | 0.190 | 0.277 | 0.224 | 0.812 |

| AR6 | 0.195 | 0.282 | 0.229 | 0.809 |

| CRVRC2 | 0.268 | 0.165 | 0.204 | 0.795 |

| CRVRC4 | 0.103 | 0.082 | 0.091 | 0.821 |

| DCL2 | 0.138 | 0.235 | 0.171 | 0.816 |

| DHP2 | 0.101 | 0.147 | 0.118 | 0.782 |

| JW3 | 0.029 | 0.027 | 0.027 | 0.822 |

| JW4 | 0.025 | 0.020 | 0.022 | 0.793 |

| LYLC2 | 0.222 | 0.182 | 0.198 | 0.700 |

Detailed Chroma Results

Here, accuracy is assessed on chroma results (i.e., all F0s are mapped to a single octave before evaluation).

| TeamID | Precision | Recall | Ave. F-measure | Ave. Overlap |

|---|---|---|---|---|

| AR5 | 0.172 | 0.259 | 0.205 | 0.799 |

| AR6 | 0.177 | 0.264 | 0.210 | 0.794 |

| CRVRC2 | 0.284 | 0.175 | 0.216 | 0.800 |

| CRVRC4 | 0.122 | 0.097 | 0.108 | 0.817 |

| DCL2 | 0.177 | 0.314 | 0.223 | 0.776 |

| DHP2 | 0.106 | 0.161 | 0.125 | 0.776 |

| JW3 | 0.032 | 0.029 | 0.030 | 0.827 |

| JW4 | 0.028 | 0.022 | 0.024 | 0.788 |

| LYLC2 | 0.232 | 0.191 | 0.207 | 0.695 |

Results Based on Onset Only

| TeamID | Precision | Recall | Ave. F-measure | Ave. Overlap |

|---|---|---|---|---|

| AR5 | 0.497 | 0.785 | 0.598 | 0.556 |

| AR6 | 0.498 | 0.777 | 0.597 | 0.557 |

| CRVRC2 | 0.579 | 0.372 | 0.452 | 0.571 |

| CRVRC4 | 0.271 | 0.220 | 0.242 | 0.545 |

| DCL2 | 0.425 | 0.738 | 0.532 | 0.564 |

| DHP2 | 0.287 | 0.445 | 0.341 | 0.520 |

| JW3 | 0.439 | 0.362 | 0.386 | 0.369 |

| JW4 | 0.456 | 0.341 | 0.381 | 0.365 |

| LYLC2 | 0.575 | 0.480 | 0.517 | 0.495 |

Chroma Results Based on Onset Only

| TeamID | Precision | Recall | Ave. F-measure | Ave. Overlap |

|---|---|---|---|---|

| AR5 | 0.454 | 0.728 | 0.549 | 0.548 |

| AR6 | 0.456 | 0.719 | 0.548 | 0.550 |

| CRVRC2 | 0.609 | 0.391 | 0.476 | 0.567 |

| CRVRC4 | 0.325 | 0.265 | 0.291 | 0.520 |

| DCL2 | 0.441 | 0.768 | 0.553 | 0.552 |

| DHP2 | 0.301 | 0.474 | 0.361 | 0.504 |

| JW3 | 0.459 | 0.379 | 0.404 | 0.364 |

| JW4 | 0.483 | 0.362 | 0.404 | 0.360 |

| LYLC2 | 0.585 | 0.488 | 0.526 | 0.494 |

Individual Results Files for Task 2

AR5 = Chunghsin Yeh, Axel Roebel

AR6 = Chunghsin Yeh, Axel Roebel

CRVRC2 = F. Quesada et al.

CRVRC4 = F. Quesada et al.

DCL2 = Arnaud Dessein, Arshia Cont, Guillaume Lemaitre

DHP2 = Zhiyao Duan, Jinyu Han, Bryan Pardo

JW3 = Jun Wu et al.

JW4 = Jun Wu et al.

LYLC2 = C. T. Lee et al.

Task 3: Instrument Tracking

The same dataset was used as in Task 1. The evaluations were performed by first one-to-one matching the detected contours to the ground-truth contours. This is done by selecting the best-scoring pairs of detected and ground-truth contours. If there are extra detected contours that are not matched to any of the ground-truth contours, all the returned F0s in those contours are added to the false positives. If there are extra ground-truth contours that are not matched to any detected contours, all the F0s in those ground-truth contours are added to the false negatives.
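The matching procedure described above can be sketched as follows. The page does not say how a pair of contours is scored or how the best pairs are selected, so this sketch makes two assumptions: a pair's score is the number of frames in which both contours have an F0 within a quarter tone of each other, and pairs are chosen greedily from the highest score down. Contours are represented as dictionaries from frame index to F0 in Hz. All names and the toy contours are hypothetical.

```python
import math


def pair_score(ref, det, tol_cents=50.0):
    """Count frames where the detected F0 is within tol_cents of the reference."""
    return sum(
        1
        for t, f in det.items()
        if t in ref and abs(1200.0 * math.log2(f / ref[t])) <= tol_cents
    )


def match_contours(refs, dets):
    """Greedy one-to-one matching; returns (true_pos, false_pos, false_neg).

    Every detected F0 that is not a correct frame of a matched pair is a
    false positive, which covers wholly unmatched detected contours.
    The same holds for reference F0s and false negatives.
    """
    pairs = sorted(
        ((pair_score(r, d), i, j) for i, r in enumerate(refs) for j, d in enumerate(dets)),
        key=lambda p: -p[0],
    )
    used_refs, used_dets = set(), set()
    true_pos = 0
    for score, i, j in pairs:
        if i in used_refs or j in used_dets:
            continue
        used_refs.add(i)
        used_dets.add(j)
        true_pos += score

    false_pos = sum(len(d) for d in dets) - true_pos
    false_neg = sum(len(r) for r in refs) - true_pos
    return true_pos, false_pos, false_neg


# Toy example: two reference contours, three detected contours.
toy_refs = [{0: 440.0, 1: 440.0, 2: 440.0}, {0: 220.0, 1: 220.0}]
toy_dets = [{0: 221.0, 1: 219.0}, {0: 441.0, 1: 439.0, 2: 300.0}, {5: 100.0}]
tp, fp, fn = match_contours(toy_refs, toy_dets)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
```

In the toy example the third detected contour has no partner, so its single F0 is counted as a false positive, as the text describes for extra detected contours.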

MF0It Detailed Results

| TeamID | Precision | Recall | Accuracy | F-measure |

|---|---|---|---|---|

| DHP3 | 0.413 | 0.293 | 0.208 | 0.339 |

Detailed Chroma Results

Here, accuracy is assessed on chroma results (i.e., all F0s are mapped to a single octave before evaluation).

| TeamID | Precision | Recall | Accuracy | F-measure |

|---|---|---|---|---|

| DHP3 | 0.457 | 0.314 | 0.230 | 0.368 |